If you like what you see with Autopilot (now the recommended way to use GKE) and want to run it at scale, there are a few things to know when setting up the cluster and scaling your workload.

Cluster Creation

To run a large Autopilot cluster, it’s advisable to do two things: 1) create the cluster with private networking (not compulsory, but public IPs are in short supply, and you may run into quota issues with public networking at large scale); and 2) provision a large enough cluster CIDR range (the --cluster-ipv4-cidr flag, from which every node draws its Pod addresses), as this can’t be changed later (a /13 really future-proofs the cluster).

Bundling this advice into a creation command, you get the following:

    CLUSTER_NAME=ap-scale-test5
    REGION=us-west1
    VERSION=1.24

    gcloud container clusters create-auto "$CLUSTER_NAME" \
        --release-channel "rapid" --region "$REGION" \
        --cluster-version "$VERSION" \
        --cluster-ipv4-cidr "/13" \
        --enable-private-nodes
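
To get a feel for what a /13 buys you, here’s a rough sketch of the arithmetic. It assumes each node reserves one fixed-size block of Pod addresses from the cluster range; the /26 per-node block used below is an assumption for illustration, not a figure from this post, so check what your own cluster uses. The function names are made up for this example.

```shell
#!/bin/sh
# Number of IPv4 addresses in a range with the given prefix length.
cidr_addresses() {
  echo $(( 1 << (32 - $1) ))
}

# Rough upper bound on node count for a cluster range.
# ASSUMPTION: every node reserves one block of Pod addresses of size
# /node_prefix (defaults to /26 here purely for illustration).
max_nodes() {
  cluster_prefix=$1
  node_prefix=${2:-26}
  echo $(( 1 << (node_prefix - cluster_prefix) ))
}

echo "/13 addresses: $(cidr_addresses 13)"
echo "/13 node bound at /26 per node: $(max_nodes 13 26)"
```

Under those assumptions a /13 holds 524288 addresses, far more headroom than a 1000-node cluster needs, which is the point of choosing it up front.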

With private networking, if you need to reach the outside internet (which is likely), you’ll also need to set up Cloud NAT, like so:

    gcloud compute routers create my-router \
        --region "$REGION" --network default

    gcloud beta compute routers nats create nat \
        --router=my-router --region="$REGION" \
        --auto-allocate-nat-external-ips \
        --nat-all-subnet-ip-ranges

Quota

If you have a public cluster, you’ll need enough Compute Engine API “In-use IP addresses” quota: one per node. Autopilot’s posted limit is currently 1000 nodes (it can actually stretch higher), so a quota of 1000 would be a decent starting point. The internet as a whole is running out of IPv4 addresses, so it’s really best to use a private cluster, per the above. With a private cluster, you’ll still use an IP for the control plane, but the default quota is enough.

You’ll also need enough Compute Engine API “CPUs” quota. As a rule of thumb, multiply the total vCPU your workload requests by about 1.3 to 1.5 to get the CPU quota number (the extra accounts for node overhead).
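
As a quick worked example of that rule of thumb, the sketch below turns a pod count and a per-pod CPU request into a quota range. The function names and the sample numbers (1000 pods at 250m each) are illustrative; plug in your own workload.

```shell
#!/bin/sh
# Total vCPU requested by a number of pods, each requesting the given
# millicores, rounded up to a whole vCPU.
workload_vcpu() {
  pod_count=$1
  pod_millicores=$2
  echo $(( (pod_count * pod_millicores + 999) / 1000 ))
}

# Apply an overhead factor given in tenths (13 = 1.3x, 15 = 1.5x),
# rounded up so the quota never undershoots.
cpu_quota() {
  requested_vcpu=$1
  factor_tenths=$2
  echo $(( (requested_vcpu * factor_tenths + 9) / 10 ))
}

total=$(workload_vcpu 1000 250)
echo "workload: ${total} vCPU"
echo "quota range: $(cpu_quota "$total" 13)-$(cpu_quota "$total" 15) vCPU"
```

For 250 vCPU of requests this suggests asking for somewhere between 325 and 375 CPUs of quota.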

This isn’t an exhaustive list. Any time you think your scaling might be hampered by quota, simply look at the Quota page, see if any quotas are nearing their limit, and request an increase.
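
If you’d rather check from a terminal than the Quota page, a small filter like the one below can flag quotas that are getting close. This is only a sketch: the function name, the 80% default threshold, and the sample numbers are all made up for illustration. It expects one "METRIC USAGE LIMIT" record per line.

```shell
#!/bin/sh
# Print each metric whose usage is at or above a threshold percentage
# of its limit (threshold defaults to 80). Reads records from stdin.
quotas_near_limit() {
  awk -v pct="${1:-80}" '$3 > 0 && ($2 / $3) * 100 >= pct {
    printf "%s %d%%\n", $1, ($2 / $3) * 100
  }'
}

# Sample records with made-up numbers, for illustration only.
printf 'CPUS 350 375\nIN_USE_ADDRESSES 4 8\n' | quotas_near_limit 80
```

Against a live project you could likely feed it from something like gcloud compute regions describe "$REGION" --flatten="quotas[]" --format="value(quotas.metric,quotas.usage,quotas.limit)", though treat that exact invocation as an assumption to verify against your gcloud version.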

Cluster Prewarming

Whether you use the Autopilot or Standard mode of operation of GKE, you will have a Kubernetes control plane that itself scales up in size to meet the demands of your cluster. The scale-up event itself can take about 15 minutes, and cause some interruption to the autoscaler adding capacity to the cluster. This can result in a frustrating scaling experience, where the cluster is shown to be “reconciling” in the UI, and things look stalled.

The good news is that this only happens once, so the solution is to pre-warm your cluster once.

We can do this with a short-lived Kubernetes Job. This job simply sleeps for an hour, then exits. Set the resource size and the parallelism (replica) count to match your expected high-water mark for the cluster, and run it once. You’ll then have a “pre-warmed” cluster, ready to handle scale events. Here’s an example that creates 1000 pods requesting 250m CPU each (250 vCPU total allocated capacity):

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: balloon
    spec:
      backoffLimit: 0
      parallelism: 1000
      template:
        spec:
          priorityClassName: balloon-priority
          terminationGracePeriodSeconds: 0
          containers:
          - name: ubuntu
            image: ubuntu
            command: ["sleep"]
            args: ["3600"]  # sleep for one hour, then exit
            resources:
              requests:
                cpu: 250m
          restartPolicy: Never

Deploy the job, like so:

    # Deploy the prewarm job
    kubectl create -f https://raw.githubusercontent.com/WilliamDenniss/autopilot-examples/master/scale-testing/prewarm-job.yaml

    # Monitor the rollout
    kubectl get pods -w

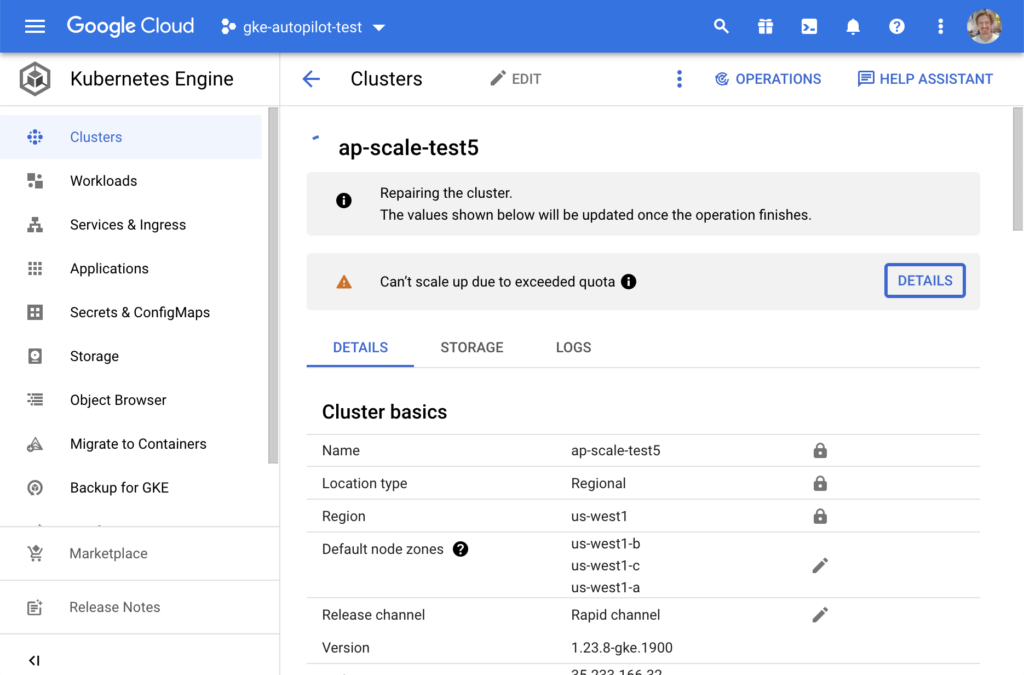

During this pre-warming step, if you visit the UI, you’ll likely see a message that the cluster is repairing. What is actually happening is that the control plane is being scaled up. You may also hit quota limits during this step (monitor the Quota page to check); if so, now is a good time to request an increase.

Capacity Reservation

If you performed the above two steps, you should have a cluster that can handle scaling. There’s one more thing you can do if you want a really optimal experience, and that is to reserve some capacity a few minutes ahead of your scaling event. You can use the Balloon Job technique to do this.
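
One way to make that repeatable is to generate a right-sized balloon Job on demand. The sketch below is a hypothetical helper, not something from the examples repo: the function name, the job name reserve-capacity, and the sizing (200 pods at 250m for 15 minutes) are all illustrative. It also assumes a PriorityClass named balloon-priority already exists in your cluster.

```shell
#!/bin/sh
# Print a balloon Job manifest for the given name, pod count,
# per-pod CPU request, and sleep duration in seconds.
# ASSUMPTION: the balloon-priority PriorityClass already exists.
balloon_job_manifest() {
  job_name=$1
  pod_count=$2
  cpu_request=$3
  sleep_seconds=$4
  cat <<EOF
apiVersion: batch/v1
kind: Job
metadata:
  name: ${job_name}
spec:
  backoffLimit: 0
  parallelism: ${pod_count}
  template:
    spec:
      priorityClassName: balloon-priority
      terminationGracePeriodSeconds: 0
      containers:
      - name: ubuntu
        image: ubuntu
        command: ["sleep"]
        args: ["${sleep_seconds}"]
        resources:
          requests:
            cpu: ${cpu_request}
      restartPolicy: Never
EOF
}

# Preview a reservation of 200 pods x 250m that lasts 15 minutes.
balloon_job_manifest reserve-capacity 200 250m 900
```

To actually reserve the capacity, you could pipe the output to kubectl create -f - a few minutes before the event, and run kubectl delete job reserve-capacity once your real workload has landed.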

Summary

By combining these three steps, and particularly the first two, you should be well set up for success in scaling a large workload. You might feel that Autopilot could ideally be a little more “auto” here, which is fair; rest assured that the team is working to improve this experience. For now, these hopefully simple steps can set you up for success.