Did you know you can now run any service mesh on Autopilot? That’s right! Autopilot is a fully managed Kubernetes platform, which by nature means you don’t have full root access to the node, but that doesn’t limit which service mesh you can install.

How does it work? Service meshes require the NET_ADMIN Linux capability. While NET_ADMIN isn’t a highly privileged capability, it has occasionally been the source of CVEs, so to keep things safe, it is off by default. If you want to run your own service mesh, though, you can now turn it on. Just add --workload-policies=allow-net-admin when creating a cluster (CLI only for now), or update an existing cluster at any time as follows:

gcloud container clusters update $CLUSTER_NAME \
    --workload-policies=allow-net-admin
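For a new cluster, the same flag goes on the create command. Here is a minimal sketch, assuming `gcloud container clusters create-auto` as the Autopilot create command; the cluster name and region (`mesh-demo`, `us-central1`) are placeholder values of mine, and the helper function is hypothetical. It only prints the command unless you set `RUN=1`.

```shell
# Placeholder values; replace with your own cluster name and region.
CLUSTER_NAME="${CLUSTER_NAME:-mesh-demo}"
REGION="${REGION:-us-central1}"

# Hypothetical helper: prints the create command so it can be reviewed.
# Set RUN=1 to execute it instead (requires an authenticated gcloud).
create_autopilot_with_net_admin() {
  set -- gcloud container clusters create-auto "$CLUSTER_NAME" \
    --region "$REGION" \
    --workload-policies=allow-net-admin
  if [ "${RUN:-0}" = "1" ]; then
    "$@"
  else
    echo "$*"
  fi
}

create_autopilot_with_net_admin
```

Printing first is just a convenience so you can check the name and region before a cluster actually gets created.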

Let’s take this for a spin and install Linkerd. Note that if you tried to install Linkerd on Autopilot in the past, you would get an error like:

$ linkerd install | kubectl apply -f -

Error from server (GKE Warden constraints violations): error when creating "STDIN": admission webhook "warden-validating.common-webhooks.networking.gke.io" denied the request: GKE Warden rejected the request because it violates one or more constraints.

Violations details: {"[denied by autogke-default-linux-capabilities]":["linux capability 'NET_ADMIN' on container 'linkerd-init' not allowed; Autopilot only allows the capabilities: 'AUDIT_WRITE,CHOWN,DAC_OVERRIDE,FOWNER,FSETID,KILL,MKNOD,NET_BIND_SERVICE,NET_RAW,SETFCAP,SETGID,SETPCAP,SETUID,SYS_CHROOT,SYS_PTRACE'."]}

Requested by user: '<>@gmail.com', groups: 'system:authenticated'.
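The rejection message lists the capabilities Autopilot allows by default, so the violation boils down to an allowlist lookup. The snippet below is only an illustration of that lookup using the list copied from the message; it is not how GKE Warden is actually implemented, and `is_allowed` is a hypothetical helper name.

```shell
# Default Autopilot capability allowlist, copied from the error message.
ALLOWED="AUDIT_WRITE,CHOWN,DAC_OVERRIDE,FOWNER,FSETID,KILL,MKNOD,NET_BIND_SERVICE,NET_RAW,SETFCAP,SETGID,SETPCAP,SETUID,SYS_CHROOT,SYS_PTRACE"

# Returns 0 if the capability ($1) appears in the comma-separated list ($2).
is_allowed() {
  case ",$2," in
    *",$1,"*) return 0 ;;
    *) return 1 ;;
  esac
}

for cap in NET_RAW NET_ADMIN; do
  if is_allowed "$cap" "$ALLOWED"; then
    echo "$cap: allowed"
  else
    echo "$cap: denied"
  fi
done
```

NET_RAW is in the default list and NET_ADMIN is not, which is why the linkerd-init container was rejected.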

“GKE Warden” is the admission controller for Autopilot, and it is what blocks NET_ADMIN by default. Now there is a solution, though: update the cluster with --workload-policies=allow-net-admin per the instructions above, and then follow the Linkerd getting started guide to install Linkerd:

$ linkerd install --crds | kubectl apply -f -

$ linkerd install | kubectl apply -f -

$ kubectl get pods -n linkerd

NAME                                      READY   STATUS    RESTARTS   AGE
linkerd-destination-5fc849744d-9tc6h      4/4     Running   0          4m18s
linkerd-identity-6f7ccc55f8-ftqqb         2/2     Running   0          4m19s
linkerd-proxy-injector-5c99787d5f-t8lf7   2/2     Running   0          4m18s
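If you would rather script this than eyeball the table, a rough sketch like the following can tell you whether every pod is Running with all containers ready. `all_pods_ready` is a hypothetical helper, and the sample input is the output shown above; in practice you would pipe in `kubectl get pods -n linkerd` instead.

```shell
# Succeeds only if at least one pod row is read from stdin and every row
# is Running with all containers ready (READY column like 2/2).
# The header row is skipped.
all_pods_ready() {
  awk 'NR > 1 {
         rows++
         split($2, ready, "/")
         if (ready[1] != ready[2] || $3 != "Running") bad = 1
       }
       END { exit (bad || rows == 0) }'
}

# Sample input taken from the output above.
if all_pods_ready <<'EOF'
NAME                                      READY   STATUS    RESTARTS   AGE
linkerd-destination-5fc849744d-9tc6h      4/4     Running   0          4m18s
linkerd-identity-6f7ccc55f8-ftqqb         2/2     Running   0          4m19s
linkerd-proxy-injector-5c99787d5f-t8lf7   2/2     Running   0          4m18s
EOF
then
  echo "control plane is ready"
else
  echo "control plane is not ready yet"
fi
```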

Once it’s set up, you can run the built-in checks:

$ linkerd check

linkerd-existence

-----------------

√ 'linkerd-config' config map exists

√ heartbeat ServiceAccount exist

√ control plane replica sets are ready

√ no unschedulable pods

√ control plane pods are ready

√ cluster networks contains all node podCIDRs

√ cluster networks contains all pods

× cluster networks contains all services

the Linkerd clusterNetworks ["10.0.0.0/8,100.64.0.0/10,172.16.0.0/12,192.168.0.0/16"] do not include svc default/kubernetes (34.118.224.1)

see https://linkerd.io/2.14/checks/#l5d-cluster-networks-pods for hints
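The failing check is essentially a CIDR membership test: the service IP has to fall inside one of the configured ranges. As a rough illustration (my own sketch in plain shell arithmetic, not Linkerd's code; `ip_to_int` and `in_cidr` are hypothetical helpers), you can see that 34.118.224.1 sits outside all four default ranges but inside 34.118.224.0/20:

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Return 0 if the IP ($1) falls inside the CIDR ($2, e.g. 10.0.0.0/8).
in_cidr() {
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - $bits)) & 0xFFFFFFFF ))
  [ $(( $ip & $mask )) -eq $(( $net & $mask )) ]
}

SVC_IP=34.118.224.1
for cidr in 10.0.0.0/8 100.64.0.0/10 172.16.0.0/12 192.168.0.0/16 34.118.224.0/20; do
  if in_cidr "$SVC_IP" "$cidr"; then
    echo "$cidr: contains $SVC_IP"
  else
    echo "$cidr: does not contain $SVC_IP"
  fi
done
```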

In my case, I got an error (shown above) and needed to update the clusterNetworks field in the Linkerd config. The reason is that GKE now uses a public /20 network range for services (which it reuses in every cluster to save you IPs). Edit the config with:

kubectl edit configmap linkerd-config -n linkerd

and append the additional CIDR range

clusterNetworks: 10.0.0.0/8,100.64.0.0/10,172.16.0.0/12,192.168.0.0/16,34.118.224.0/20
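If you are building that value in a script, a small helper can append the range only when it is missing, so running it twice doesn't duplicate the entry. This is a sketch of mine (`append_cidr` is a hypothetical name); it only produces the string, and you still paste the result into the clusterNetworks field yourself.

```shell
# Append a CIDR ($2) to a comma-separated list ($1) unless already present.
append_cidr() {
  case ",$1," in
    *",$2,"*) echo "$1" ;;
    *) echo "$1,$2" ;;
  esac
}

# Default ranges from the check output, plus the GKE service range.
NETWORKS="10.0.0.0/8,100.64.0.0/10,172.16.0.0/12,192.168.0.0/16"
append_cidr "$NETWORKS" "34.118.224.0/20"
```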

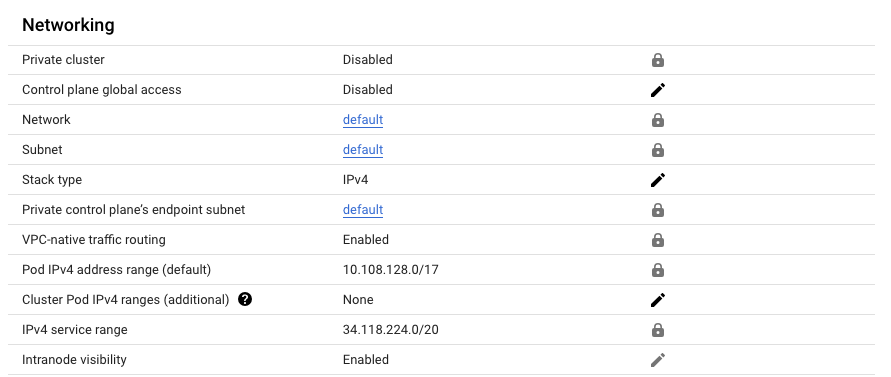

This is a static range, but if needed you can find this network range in the cluster info settings, like so:

Figure 1: Network ranges used in a default Autopilot cluster, including the IPv4 service range that I needed to add

With Linkerd configured and passing the checks, I can now install the example app

Figure 2: The Linkerd demo app, Emoji Vote

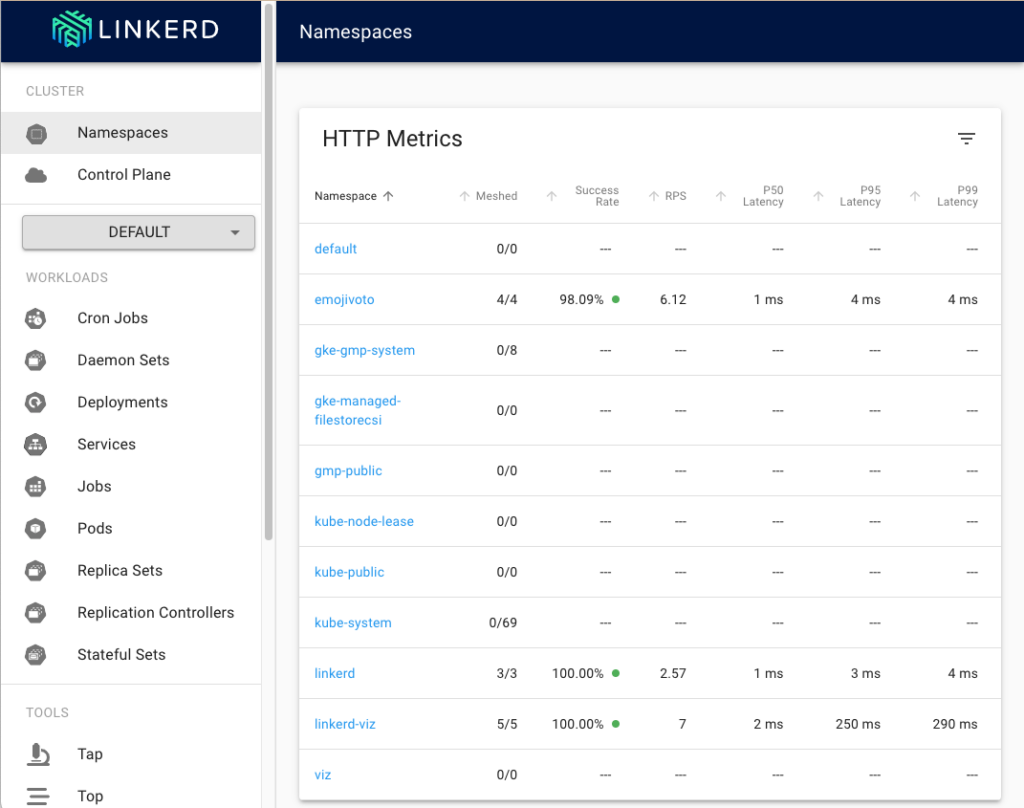

and visualization dashboard. Neat!

Figure 3: Linkerd visualization dashboard running in Autopilot

I personally believe that Autopilot, by combining a fully managed platform with the compatibility and flexibility to run workloads ranging from GPU training jobs to custom service meshes, is the ideal platform to run your workloads.