Did you know that you can now add Pod IP ranges to existing GKE clusters? Pods use a lot of IPs, which in the past forced a compromise: do you allocate a large range to the cluster to allow for growth, at the cost of reserving a big block of IPs up front, or do you allocate a small range to conserve IPs, at the risk of having to recreate the cluster if you outgrow it?

Compromise no more. When creating GKE clusters, you can assign a small range and add supplemental ranges later. For GKE Autopilot clusters it’s especially easy, as there is no additional node configuration to worry about after you add the new range (you can also add ranges to node-based clusters, but you need to create new node pools to pick up the new ranges).

Trying it out

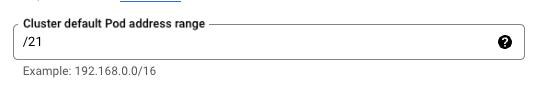

Let’s take it for a spin to build confidence in the system! I’m going to create an IP-constrained cluster in Autopilot mode to see how easy it is to expand. When creating a cluster, the smallest Pod IP range you can give is a /21, so let’s use that. Here’s what it looks like in the UI in the networking settings:

Or, on the command line:

```shell
CLUSTER_NAME=wdenniss-ip-constrained
REGION=us-central1

gcloud container clusters create-auto $CLUSTER_NAME \
    --cluster-ipv4-cidr "/21" \
    --region $REGION
```

Now, schedule a workload with a large replica count:

```shell
kubectl create -f https://raw.githubusercontent.com/WilliamDenniss/kubernetes-for-developers/master/Chapter03/3.2_DeployingToKubernetes/deploy.yaml
kubectl scale --replicas 1000 deployment/timeserver
```

WARNING: Scaling to 1,000 replicas isn’t cheap, so don’t try this if you’re on a budget (fortunately, Google is paying for my cluster, so I can run these tests sweat-free). If you do run the test, be sure to tear it down once you’re done.

Autopilot uses 64 IPs per node by default (32 Pods per node), and a /21 has 2,048 IPs. 2,048/64 = 32, so at most we can create 32 nodes.
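The same arithmetic works for any prefix length. Here is a minimal sketch of it as a shell function; max_pod_nodes is a hypothetical helper (not part of gcloud or kubectl), and the 64-IPs-per-node figure is the Autopilot default quoted above.

```shell
# Hypothetical helper: how many nodes fit in a Pod range of the given
# prefix length, assuming each node reserves a fixed block of Pod IPs.
max_pod_nodes() {
  local prefix_len=$1 ips_per_node=$2
  # A /N range holds 2^(32 - N) addresses.
  echo $(( (1 << (32 - prefix_len)) / ips_per_node ))
}

max_pod_nodes 21 64   # prints 32
max_pod_nodes 20 64   # prints 64
```

Each step down in prefix length doubles the address count, and so doubles the node ceiling.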

Sure enough, that’s what happened.

```
$ kubectl get deploy,nodes
NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/timeserver 390/1000 1000 390 9m44s

NAME STATUS ROLES AGE VERSION
node/gk3-wdenniss-ip-constrai-default-pool-5861ae2f-g7z6 Ready <none> 9m56s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrai-default-pool-7f4db38e-16fg Ready <none> 9m45s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-8399ce45-725z Ready <none> 7m5s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-8399ce45-ftl8 Ready <none> 6m57s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-8399ce45-gk5j Ready <none> 7m4s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-8399ce45-hkds Ready <none> 7m5s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-8399ce45-jmrb Ready <none> 7m3s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-8399ce45-r5vg Ready <none> 7m5s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-8399ce45-r97f Ready <none> 7m5s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-8399ce45-s96h Ready <none> 7m6s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-8399ce45-sznw Ready <none> 7m6s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-8399ce45-xp2w Ready <none> 7m5s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-8399ce45-xw6v Ready <none> 7m5s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-5l9w Ready <none> 7m10s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-5mnw Ready <none> 7m5s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-5qb6 Ready <none> 7m2s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-6m9v Ready <none> 7m8s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-cv6s Ready <none> 7m8s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-cxvq Ready <none> 7m3s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-f9r4 Ready <none> 7m8s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-hdkc Ready <none> 7m6s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-hpqm Ready <none> 7m5s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-j9q2 Ready <none> 7m6s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-kzvl Ready <none> 7m6s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-ls96 Ready <none> 7m8s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-nzs2 Ready <none> 7m12s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-slmk Ready <none> 7m6s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-v6zn Ready <none> 7m9s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-bd29cd72-zlnv Ready <none> 7m8s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-c5a846aa-kdmf Ready <none> 7m2s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-c5a846aa-pwdn Ready <none> 7m5s v1.27.3-gke.100
node/gk3-wdenniss-ip-constrained-pool-3-c5a846aa-q7xc Ready <none> 7m6s v1.27.3-gke.100
```

Counting the nodes confirms it’s a match (32 nodes plus the header line):

```
$ kubectl get nodes | wc -l
33
```

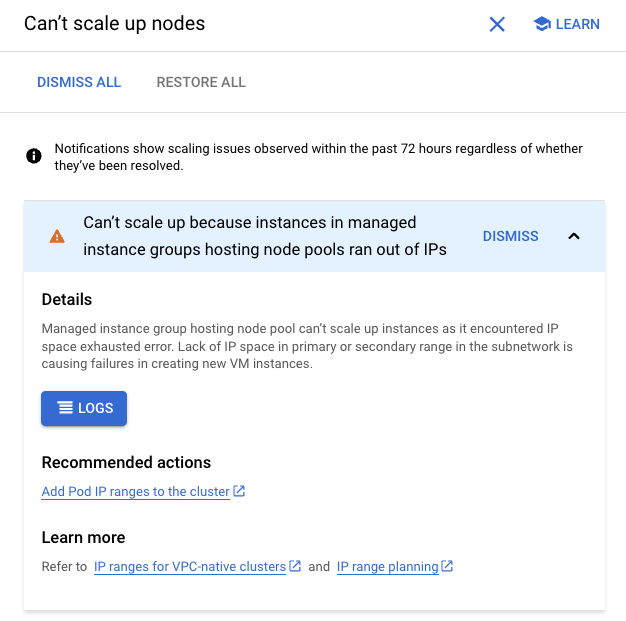

GKE even shows a handy error message that identifies the problem and links to the docs describing the solution.

To fix this, let’s add another /21 range, good for another 32 nodes. I’ll also use a non-RFC 1918 range (240.88.0.0/21, from the reserved 240.0.0.0/4 block) to save IPs in my 10.0.0.0/8 network; this part is optional. First, add a new secondary range to the subnet assigned to the cluster:

```
$ SUBNET_NAME=default
$ RANGE_NAME=more-pods
$ gcloud compute networks subnets update $SUBNET_NAME \
    --add-secondary-ranges $RANGE_NAME=240.88.0.0/21 \
    --region=$REGION
Updated [.../regions/us-central1/subnetworks/default].
```
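If you pick a non-RFC 1918 range by hand like this, it can be worth sanity-checking it locally before touching the VPC. The sketch below is a minimal pure-shell check; ip_to_int, cidr_is_aligned and is_rfc1918 are hypothetical helper names, and the check only covers prefix alignment and membership in the three RFC 1918 blocks, not overlap with ranges already in use in your VPC.

```shell
# Hypothetical helpers for sanity-checking a candidate secondary range.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if the CIDR's address sits on its prefix boundary (no host bits set).
cidr_is_aligned() {
  local addr=${1%/*} len=${1#*/}
  local size=$(( 1 << (32 - len) ))
  [ $(( $(ip_to_int "$addr") % size )) -eq 0 ]
}

# Succeed if the address is in 10.0.0.0/8, 172.16.0.0/12 or 192.168.0.0/16.
is_rfc1918() {
  local ip
  ip=$(ip_to_int "${1%/*}")
  [ $(( ip >> 24 )) -eq 10 ] ||
    [ $(( ip >> 20 )) -eq $(( (172 << 4) | 1 )) ] ||
    [ $(( ip >> 16 )) -eq $(( (192 << 8) | 168 )) ]
}

CANDIDATE=240.88.0.0/21
if cidr_is_aligned "$CANDIDATE" && ! is_rfc1918 "$CANDIDATE"; then
  echo "$CANDIDATE: aligned, outside RFC 1918"
fi
```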

Then update the cluster to add this new range:

```
$ gcloud container clusters update $CLUSTER_NAME \
    --additional-pod-ipv4-ranges=$RANGE_NAME \
    --region=$REGION
Updating wdenniss-ip-constrained...done.
Updated [.../us-central1/clusters/wdenniss-ip-constrained].
```

This happens quite quickly, and now we can see the new secondary range in the cluster info.

Since this is Autopilot, there’s nothing more to do! The system will reconcile the existence of the new IPs and scale up for us.

TIP: This also works on node-based clusters. If the cluster has run out of Pod IPs and you add a new range with --additional-pod-ipv4-ranges as I did here, new node pools you create will use the new range.

Checking back a while later, the node count has doubled (65 lines, which is 64 nodes plus the header):

```
$ kubectl get nodes | wc -l
65
$ kubectl get deploy
NAME READY UP-TO-DATE AVAILABLE AGE
timeserver 810/1000 1000 810 155m
$ kubectl get nodes
NAME STATUS ROLES AGE VERSION
gk3-wdenniss-ip-constrai-nap-1ls7unll-3a1c8982-56xk Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-3a1c8982-824h Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-3a1c8982-8rkj Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-3a1c8982-b55c Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-3a1c8982-n2df Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-3a1c8982-p5gv Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-3a1c8982-rsf6 Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-3a1c8982-vpc6 Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-be52d4c3-6kn6 Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-be52d4c3-9xzf Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-be52d4c3-fcvk Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-be52d4c3-p75q Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-be52d4c3-qc9k Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-be52d4c3-rrk7 Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-be52d4c3-s9b8 Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-d642485b-4dn9 Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-d642485b-929b Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-d642485b-c49t Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-d642485b-ctvf Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-d642485b-mr74 Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-d642485b-rcrm Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-d642485b-s2th Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-d642485b-tr7q Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-d642485b-v8gz Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-d642485b-z7tj Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-eab6680a-792f Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-eab6680a-dw2l Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-eab6680a-r65p Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-eab6680a-rwss Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-eab6680a-v5wt Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-eab6680a-w8tx Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrai-nap-1ls7unll-eab6680a-x8vx Ready <none> 43m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-1-a8ecf6b7-7pfx Ready <none> 132m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-1-a8ecf6b7-rwhb Ready <none> 132m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-8399ce45-725z Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-8399ce45-ftl8 Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-8399ce45-gk5j Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-8399ce45-hkds Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-8399ce45-jmrb Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-8399ce45-r5vg Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-8399ce45-r97f Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-8399ce45-s96h Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-8399ce45-sznw Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-8399ce45-xp2w Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-8399ce45-xw6v Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-5l9w Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-5mnw Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-5qb6 Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-6m9v Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-cv6s Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-cxvq Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-f9r4 Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-hdkc Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-hpqm Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-j9q2 Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-kzvl Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-ls96 Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-nzs2 Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-slmk Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-v6zn Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-bd29cd72-zlnv Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-c5a846aa-kdmf Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-c5a846aa-pwdn Ready <none> 153m v1.27.3-gke.100
gk3-wdenniss-ip-constrained-pool-3-c5a846aa-q7xc Ready <none> 153m v1.27.3-gke.100
```

Under the hood, each node pool can only draw from a single Pod range, which is why all 32 new nodes landed in a newly created node pool (the ones with nap in their names above). Fortunately, in Autopilot this detail is taken care of for us. I got another 32 nodes, and will need to repeat the steps above to add one more range to fulfil the 1,000-replica request (or I could have added a range larger than a /21 the first time round).
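As a rough check on that "one more range" estimate: after the second range, 810 replicas were ready on 64 nodes. Assuming Pod density per node stays about the same as observed (an assumption; the real packing can vary), a back-of-the-envelope calculation looks like this, using a hypothetical ranges_needed helper:

```shell
# Hypothetical estimate: how many Pod ranges are needed in total to reach a
# target replica count, assuming Pods per node stays at the observed ratio.
ranges_needed() {
  local target=$1 ready=$2 nodes=$3 nodes_per_range=$4
  # nodes needed = ceil(target * nodes / ready), in integer arithmetic
  local nodes_needed=$(( (target * nodes + ready - 1) / ready ))
  # ranges needed = ceil(nodes_needed / nodes_per_range)
  echo $(( (nodes_needed + nodes_per_range - 1) / nodes_per_range ))
}

# 1000 replicas wanted, 810 ready on 64 nodes, 32 nodes per /21 range
ranges_needed 1000 810 64 32   # prints 3
```

That works out to about 80 nodes, or three /21 ranges in total: one more than the two the cluster has now.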

Hopefully you can see that with the ability to add additional Pod ranges, you can now start with a conservative Pod IP allocation and expand as needed. I wouldn’t really recommend starting as low as /21, but it’s an option.

Clean up

If you tried this yourself, clean up by deleting the Deployment. On Autopilot you don’t need to worry about the nodes provisioned under the hood, as the charges stop once the Pods are deleted.

```
$ kubectl delete deploy/timeserver
```

Then delete the cluster:

```
$ gcloud container clusters delete $CLUSTER_NAME \
    --region $REGION
```
