Ahmet’s blog post “Did we market Knative wrong?” got me interested in trying out Knative again. I’ll confess that I found the original Istio requirement a bit heavy just to run serverless, and it looks like the project has matured a lot since the early days. Version 1.0.0 was also just released, so it seems like pretty good timing to give it a go.

Naturally, I wanted to see if it worked on Autopilot. Autopilot is a “nodeless” Kubernetes platform, and Knative offers a serverless layer for Kubernetes, so in theory this could be a pretty good match. I was a bit worried that Knative might require root access to nodes, and thus run up against some Autopilot restrictions. The good news is that despite some complications, it does work!

I’m going to share a simpler guide once some of the underlying bugs have been fixed. For now, if you’re interested, here’s my testing log:

Here are my installation steps, taken from the Knative guide. These 5 commands do three things:

- install the CRDs and Serving components
- install and configure Kourier for networking
- set up automatic DNS names (derived from the LoadBalancer IP) using sslip.io

```
kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.0.0/serving-crds.yaml
kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.0.0/serving-core.yaml
kubectl apply -f https://github.com/knative/net-kourier/releases/download/knative-v1.0.0/kourier.yaml
kubectl patch configmap/config-network \
  --namespace knative-serving \
  --type merge \
  --patch '{"data":{"ingress-class":"kourier.ingress.networking.knative.dev"}}'
kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.0.0/serving-default-domain.yaml
```

This appears to work, since no errors are emitted during the process. On closer inspection, however, some of the Deployments have not come up. On Autopilot it can take about 90 seconds for Pods to be provisioned. In this case, though, there aren’t even Pending Pods for those Deployments, which is a bad sign.
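You don't have to scan the READY column by eye. The stuck Deployments can be picked out by comparing the ready and desired counts in each row. Here is a minimal awk sketch that reads kubectl get deploy output, like the listing below, on stdin. The function name not_ready_deployments is hypothetical and exists only for illustration.

```shell
#!/usr/bin/env bash
# Print the names of Deployments whose READY column ("<ready>/<desired>")
# shows fewer ready replicas than desired. Reads `kubectl get deploy`
# output on stdin and skips the header row.
# not_ready_deployments is a hypothetical helper name for this sketch.
not_ready_deployments() {
  awk 'NR > 1 {
    split($2, ready, "/")
    if (ready[1] + 0 < ready[2] + 0) print $1
  }'
}

# Usage (needs cluster access):
#   kubectl get deploy -n knative-serving | not_ready_deployments
```

Against the listing below, this prints activator, autoscaler, domainmapping-webhook and webhook.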

```
$ kubectl get deploy
NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
activator                0/1     0            0           2m36s
autoscaler               0/1     0            0           2m35s
controller               1/1     1            1           2m35s
domain-mapping           1/1     1            1           2m34s
domainmapping-webhook    0/1     0            0           2m34s
net-kourier-controller   1/1     1            1           2m31s
webhook                  0/1     0            0           2m34s
```

Looking at the event log, we can see an error from the ReplicaSet managed by the webhook Deployment:

```
$ kubectl get events -w
1s Warning FailedCreate replicaset/webhook-5dd8498b96 Error creating: admission webhook "policycontrollerv2.common-webhooks.networking.gke.io" denied the request: GKE Policy Controller rejected the request because it violates one or more policies: {"[denied by autogke-node-affinity-selector-limitation]":["Auto GKE disallows use of cluster-autoscaler.kubernetes.io/safe-to-evict=false annotation on workloads"]}
```

The problem is the cluster-autoscaler.kubernetes.io/safe-to-evict annotation, which Autopilot rejects. Setting it to false prevents the cluster autoscaler from evicting the Pod from its node. In a “nodeless” system like Autopilot, that is exactly the kind of node-level control users don’t have.
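The fix below uses a JSON Patch to remove the annotation. JSON Patch addresses the annotation through a JSON Pointer path. Because the annotation key itself contains a /, that character has to be written as ~1, and RFC 6901 likewise requires a literal ~ to be written as ~0. Here is a small sketch that builds such a patch for any pod-template annotation and loops over the four affected Deployments. All helper names are hypothetical, and the Deployment list is simply the four that failed above.

```shell
#!/usr/bin/env bash
# Escape one JSON Pointer reference token (RFC 6901): "~" becomes "~0"
# first, then "/" becomes "~1", so the key is not split into path segments.
json_pointer_escape() {
  printf '%s' "$1" | sed -e 's/~/~0/g' -e 's#/#~1#g'
}

# Build a JSON Patch that removes one annotation from a Deployment's
# pod template (spec.template.metadata.annotations).
remove_template_annotation_patch() {
  printf '[{"op":"remove","path":"/spec/template/metadata/annotations/%s"}]' \
    "$(json_pointer_escape "$1")"
}

# Hypothetical wrapper: apply the patch to each Deployment that did not
# scale up. Needs kubectl access to the cluster, so it is defined but not called.
remove_safe_to_evict() {
  local patch deploy
  patch="$(remove_template_annotation_patch 'cluster-autoscaler.kubernetes.io/safe-to-evict')"
  for deploy in activator autoscaler domainmapping-webhook webhook; do
    kubectl patch -n knative-serving deployment "$deploy" --type=json -p="$patch"
  done
}
```

The generated patch is byte-for-byte the one used in the commands below. A JSON Patch remove fails if the path doesn't exist, so kubectl will report an error for any Deployment that doesn't carry the annotation.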

Fortunately, this is pretty easy to fix. We can patch the 4 Deployments that didn’t scale up to remove the offending annotation:

```
kubectl patch -n knative-serving deployment activator --type=json -p='[{"op":"remove","path":"/spec/template/metadata/annotations/cluster-autoscaler.kubernetes.io~1safe-to-evict"}]'
kubectl patch -n knative-serving deployment autoscaler --type=json -p='[{"op":"remove","path":"/spec/template/metadata/annotations/cluster-autoscaler.kubernetes.io~1safe-to-evict"}]'
kubectl patch -n knative-serving deployment domainmapping-webhook --type=json -p='[{"op":"remove","path":"/spec/template/metadata/annotations/cluster-autoscaler.kubernetes.io~1safe-to-evict"}]'
kubectl patch -n knative-serving deployment webhook --type=json -p='[{"op":"remove","path":"/spec/template/metadata/annotations/cluster-autoscaler.kubernetes.io~1safe-to-evict"}]'
```

Now when we check the Deployments again, everything looks good!

```
$ kubectl get deploy -n knative-serving
NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
activator                1/1     1            1           22m
autoscaler               1/1     1            1           21m
controller               1/1     1            1           21m
domain-mapping           1/1     1            1           21m
domainmapping-webhook    1/1     1            1           21m
net-kourier-controller   1/1     1            1           21m
webhook                  1/1     1            1           21m
```

Let’s try the getting started sample! Aside: if you need to install the kn CLI on Cloud Shell, check this guide.

```
$ kn service create hello \
> --image gcr.io/knative-samples/helloworld-go \
> --port 8080 \
> --env TARGET=World \
> --revision-name=world
Creating service 'hello' in namespace 'knative-serving':

0.089s The Configuration is still working to reflect the latest desired specification.
0.269s The Route is still working to reflect the latest desired specification.
0.337s Configuration "hello" is waiting for a Revision to become ready.
2.515s Revision "hello-world" failed with message: 0/7 nodes are available: 7 Insufficient cpu, 7 Insufficient memory..
2.604s Configuration "hello" does not have any ready Revision.

Error: RevisionFailed: Revision "hello-world" failed with message: 0/7 nodes are available: 7 Insufficient cpu, 7 Insufficient memory..
Run 'kn --help' for usage
```

There’s an error there, but it looks like the Knative service was created correctly:

```
$ kn service list
NAME    URL                                                   LATEST        AGE     CONDITIONS   READY   REASON
hello   http://hello.knative-serving.34.127.99.151.sslip.io   hello-world   6m48s   3 OK / 3     True
```

Let’s look at the Pods:

```
$ kubectl get pods -w
NAME                                      READY   STATUS        RESTARTS   AGE
hello-world-deployment-7457c6557c-t85jw   0/2     Terminating   0          8s
hello-world-deployment-7457c6557c-t85jw   0/2     Terminating   0          8s
hello-world-deployment-7cc47879b8-p7ng2   0/2     Pending       0          0s
hello-world-deployment-7cc47879b8-p7ng2   0/2     Pending       0          0s
hello-world-deployment-6647bd5575-5tw74   0/2     Terminating   0          8s
hello-world-deployment-6647bd5575-5tw74   0/2     Terminating   0          8s
hello-world-deployment-57f7b85569-n6vcc   0/2     Pending       0          0s
hello-world-deployment-57f7b85569-n6vcc   0/2     Pending       0          0s
hello-world-deployment-7cc47879b8-p7ng2   0/2     Terminating   0          6s
```

There is a LOT of churn here. Pods for my Knative service become Pending, but then get terminated a few seconds later. At a guess, Knative isn’t giving Autopilot a chance to scale up the compute capacity to handle these Pods. Once it sees that they are Pending, it just removes them. That’s no good (bug filed).
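One rough way to quantify this churn is to list the Pods that reached Terminating without ever reaching Running in the watch output. Here is a small awk sketch that reads kubectl get pods -w output such as the listing above. The helper name churned_pods is hypothetical, and the sketch assumes STATUS is the third column, as it is in that output.

```shell
#!/usr/bin/env bash
# Read `kubectl get pods -w` output on stdin and print, sorted, the Pods that
# were seen Terminating but never Running (STATUS is the third column).
# churned_pods is a hypothetical helper name for this sketch.
churned_pods() {
  awk '
    $3 == "Running"     { ran[$1] = 1 }
    $3 == "Terminating" { terminated[$1] = 1 }
    END { for (pod in terminated) if (!(pod in ran)) print pod }
  ' | LC_ALL=C sort
}

# Usage (needs cluster access; a watch never ends on its own, so bound it):
#   timeout 60 kubectl get pods -w -n knative-serving | churned_pods
```

On the listing above, it reports the three Pods that were terminated while still Pending.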

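How to solve this? The answer is balloon Pods. Balloon Pods are a way to provision spare capacity in Autopilot. A balloon Pod holds capacity at a low priority. When a real Pod needs the room, the balloon is preempted and the real Pod can be scheduled straight away. To set them up, we need to know the size of the Pods being created. Inspecting one of the churned Knative Pods, we see: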

```
$ kubectl describe pod hello-world-deployment-7f96f5bf74-c65d5
Requests:
  cpu:                725m
  ephemeral-storage:  1Gi
  memory:             26843546
```

I’m going to create a balloon Deployment with a single replica matching that Pod, so there’s just enough spare capacity to run it:

knative-hello-world-balloon.yaml

```
apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: balloon-priority
value: -10
preemptionPolicy: Never
globalDefault: false
description: "Balloon pod priority."
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: balloon
spec:
  replicas: 1
  selector:
    matchLabels:
      app: balloon-pod
  template:
    metadata:
      labels:
        app: balloon-pod
    spec:
      priorityClassName: balloon-priority
      terminationGracePeriodSeconds: 0
      containers:
      - name: ubuntu
        image: ubuntu
        command: ["sleep"]
        args: ["infinity"]
        resources:
          requests:
            cpu: 725m
            memory: 750Mi
```

Aside: here’s a summary of all the installation steps. Once the balloon Pod is running, we can try again.
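Before retrying, it’s worth checking that the balloon really is at least as large as the Pod it stands in for, and waiting until it has rolled out. Here is a minimal sketch that converts Kubernetes quantities to common units and compares them. It assumes only the suffixes that appear in this post: m for CPU, Ki/Mi/Gi for memory, and plain numbers as bytes. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Convert a CPU quantity to millicores. Handles only "725m"-style millicores
# or a whole number of cores such as "1"; fractional cores are not covered.
cpu_to_millicores() {
  case "$1" in
    *m) echo $(( ${1%m} )) ;;
    *)  echo $(( $1 * 1000 )) ;;
  esac
}

# Convert a memory quantity to bytes. Handles only Ki, Mi, Gi suffixes and
# plain byte counts; other Kubernetes suffixes (k, M, G, ...) are not covered.
memory_to_bytes() {
  case "$1" in
    *Ki) echo $(( ${1%Ki} * 1024 )) ;;
    *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
    *)   echo $(( $1 )) ;;
  esac
}

# Succeed if the balloon's requests are at least the Pod's requests.
# Arguments: pod_cpu pod_memory balloon_cpu balloon_memory
balloon_covers() {
  [ "$(cpu_to_millicores "$3")" -ge "$(cpu_to_millicores "$1")" ] &&
    [ "$(memory_to_bytes "$4")" -ge "$(memory_to_bytes "$2")" ]
}

# Hypothetical wrapper: block until the balloon Deployment has rolled out.
# Needs kubectl access to the cluster, so it is defined but not called.
wait_for_balloon() {
  kubectl rollout status deployment/balloon --timeout=5m
}
```

With the values from the describe output and the manifest, balloon_covers 725m 26843546 725m 750Mi succeeds.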

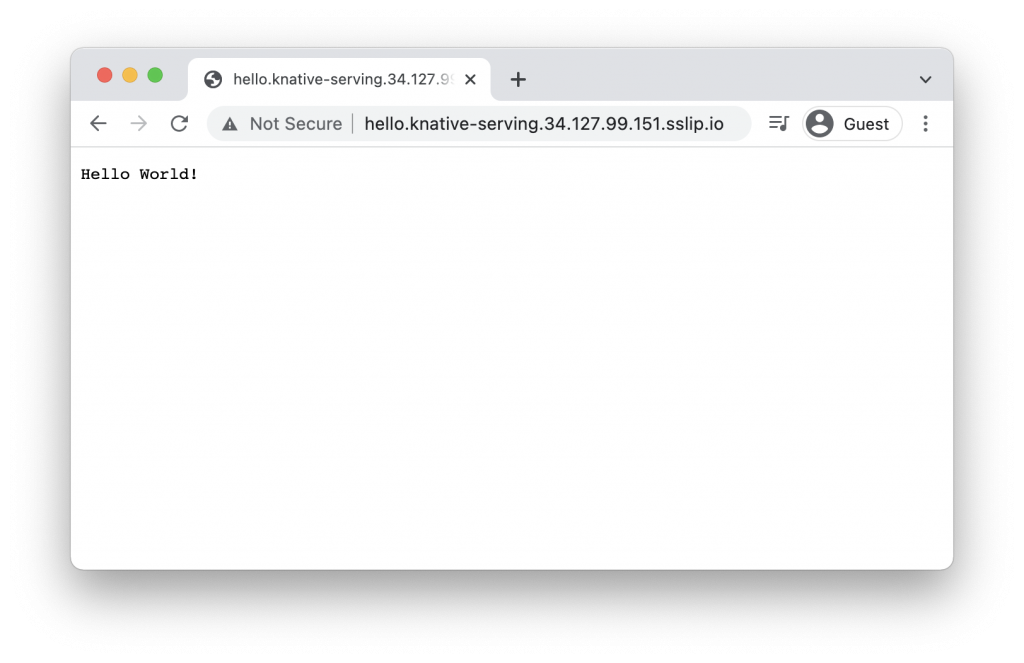

Visiting the URL from kn service list once again, we can see that it now works!
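The URL that kn reports follows the pattern service.namespace.IP.sslip.io. Here IP is the external address of the Kourier LoadBalancer, as configured by serving-default-domain.yaml. Below is a minimal sketch that rebuilds the URL and requests it. It assumes Kourier’s Service is named kourier in the kourier-system namespace, and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Compose the sslip.io URL for a Knative Service, following the
# <service>.<namespace>.<ip>.sslip.io pattern shown by `kn service list`.
sslip_url() {
  local service="$1" namespace="$2" ip="$3"
  printf 'http://%s.%s.%s.sslip.io\n' "$service" "$namespace" "$ip"
}

# Hypothetical wrapper: look up the Kourier LoadBalancer IP and request the
# service. Assumes the Service is "kourier" in "kourier-system"; needs cluster
# access, so it is defined but not called.
curl_knative_service() {
  local ip
  ip="$(kubectl get service kourier -n kourier-system \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"
  curl -sS "$(sslip_url "$1" "$2" "$ip")"
}
```

For the sample here, curl_knative_service hello knative-serving would request the same URL that kn service list printed.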

Looking at the Pods this time, we see that our Knative service Pods reach the ContainerCreating and then Running stages:

```
$ kubectl get pods -w
hello-world-deployment-5d69cc665d-fcpbl   0/2     Pending             0          0s
hello-world-deployment-5d69cc665d-fcpbl   0/2     Pending             0          0s
hello-world-deployment-5d69cc665d-fcpbl   0/2     ContainerCreating   0          1s
hello-world-deployment-5cc599447d-zlb6q   0/2     Pending             0          0s
hello-world-deployment-5cc599447d-zlb6q   0/2     Pending             0          0s
hello-world-deployment-5cc599447d-zlb6q   0/2     ContainerCreating   0          0s
hello-world-deployment-5d69cc665d-fcpbl   1/2     Running             0          18s
hello-world-deployment-5d69cc665d-fcpbl   2/2     Running             0          19s
```

What’s next? While Knative 1.0.0 can be made to run on Autopilot, there’s room for improvement. Removing the churn of Pending Pods, for example, would help and would avoid the need for a balloon Pod. Without a balloon, the first request to a service might take a minute while capacity scales up. Balloon Pods could therefore still be used to speed that up; they just wouldn’t be required. Bugs aside, I think this is a pretty nice pairing: serverless Kubernetes on a nodeless Kubernetes platform!