Autopilot is now available in GKE clusters via Compute Class. See the official announcement and documentation. Versions with this feature are now available for clusters in the Regular release channel. Here’s how to upgrade early and get these features today. Per the docs, the feature requires version 1.34.1-gke.1829001 or later. To upgrade we need… Continue reading Upgrading a GKE cluster to use Autopilot Compute Class
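The minimum version is easy to check by hand, but if you're scripting the upgrade, compare versions numerically: as plain strings, "1.34.10-gke.100" would sort before "1.34.9-gke.100". Here's a minimal sketch, assuming GKE versions always follow the `MAJOR.MINOR.PATCH-gke.BUILD` shape. The helper names are hypothetical and not part of any Google tool.

```python
from __future__ import annotations

import re

# Assumed shape of a GKE version string, e.g. "1.34.1-gke.1829001".
_GKE_VERSION = re.compile(r"^(\d+)\.(\d+)\.(\d+)-gke\.(\d+)$")


def parse_gke_version(version: str) -> tuple[int, ...]:
    """Split a GKE version into integers so tuples compare numerically."""
    match = _GKE_VERSION.match(version.strip())
    if match is None:
        raise ValueError(f"unrecognized GKE version: {version!r}")
    return tuple(int(part) for part in match.groups())


def at_least(version: str, minimum: str = "1.34.1-gke.1829001") -> bool:
    """True if `version` is the minimum version from the docs or newer."""
    return parse_gke_version(version) >= parse_gke_version(minimum)


if __name__ == "__main__":
    print(at_least("1.34.1-gke.1829001"))  # True
    print(at_least("1.33.5-gke.1080000"))  # False
```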

Category: GKE

Using C4A and C4D with Compute Class

I’ve been following Arm for a while, so I was glad that the C4A VM was GA’d earlier this year. These machines run Google’s own Axion chip. It’s also great to see broad geographical availability, including regions like Tokyo. Feels like Arm is finally ready for prime time. C4D running 5th Gen AMD EPYC processors (Turin)… Continue reading Using C4A and C4D with Compute Class

Simulate a zonal failure on Autopilot

GKE’s Autopilot mode is designed to be safer to operate, and prevents many issues that can lead to sudden outages, like misconfigured firewalls. However, if you actually want to cause a failure, for example when performing a zonal failure simulation, Autopilot’s safeguards can sometimes get in the way, blocking some of the potential ways to run… Continue reading Simulate a zonal failure on Autopilot

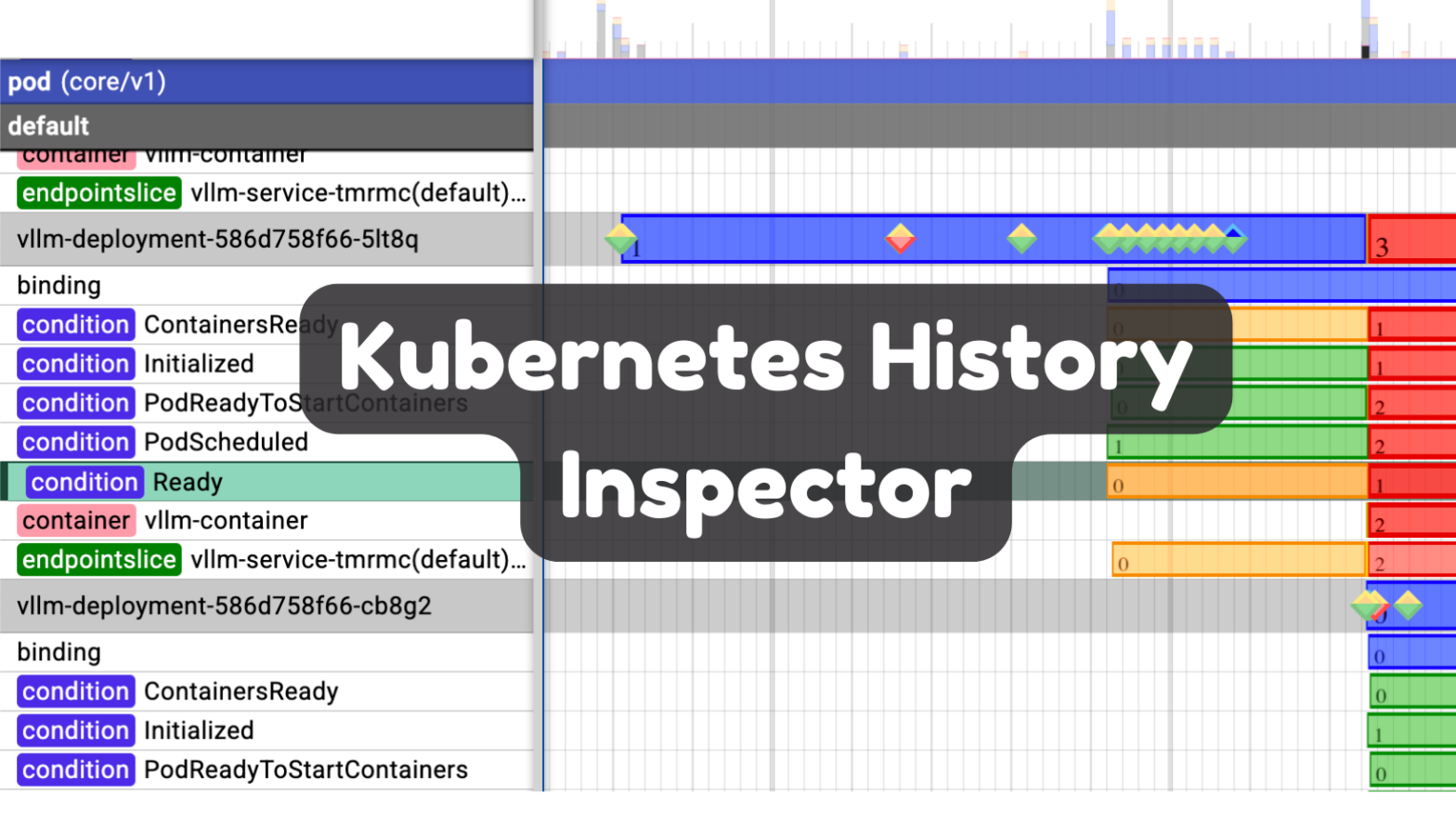

Kubernetes History Inspector

GKE users get access to an awesome new tool this week: the Kubernetes History Inspector. This tool, released as open source, parses Kubernetes and GKE logs to generate a timeline of all events in the cluster. Kubernetes is a complicated system with many objects and various automated processes. A single Deployment results in at least… Continue reading Kubernetes History Inspector

Using Node-based pricing on GKE Autopilot

New this year, Autopilot has two pricing models: the original Pod-based model and the new node-based option. The pricing page does a pretty good job of explaining the difference (at least I hope it explains it well, as I wrote it) and how best to use each option, but here’s a quick recap anyway:… Continue reading Using Node-based pricing on GKE Autopilot

Serving Stable Diffusion with RayServe on GKE Autopilot

Now that you’ve tried out Stable Diffusion on GKE Autopilot via the WebUI, you might be wondering how you’d go about adding Stable Diffusion as a proper microservice that other components of your application can call. One popular way is via Ray. Let’s try this tutorial: Serve a StableDiffusion text-to-image model on Kubernetes, on GKE… Continue reading Serving Stable Diffusion with RayServe on GKE Autopilot

Stable Diffusion WebUI on GKE Autopilot

I recently set out to run Stable Diffusion on GKE in Autopilot mode, building a container from scratch using AUTOMATIC1111’s webui. This is likely not how you’d host a Stable Diffusion service for production (which would make a good topic for another blog post), but it’s a fun way to try out the… Continue reading Stable Diffusion WebUI on GKE Autopilot

LLM Model Serving on Autopilot

When building your business using LLMs as a key component, you may wish to be master of your own domain and run your own model. Running your own LLM protects you from changes to third-party services, such as price increases or API availability, guarantees the privacy of your data (no data needs to leave your… Continue reading LLM Model Serving on Autopilot

Cordoning nodes in GKE Autopilot

Occasionally you may wish to remove and replace a node in GKE Autopilot. That can be done by cordoning the node. If you want to immediately drain the Pods and put them in the pending state, you can further drain the node. What if you need to do this for all nodes? Be aware that this is pretty disruptive,… Continue reading Cordoning nodes in GKE Autopilot
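Under the hood, cordoning sets `spec.unschedulable: true` on the Node object, which is what `kubectl cordon` does. To script it for several nodes, here's a minimal sketch that works with any object exposing a `patch_node(name, body)` method, such as `CoreV1Api` from the official Kubernetes Python client. The `cordon_nodes` helper is hypothetical, and it only cordons; it doesn't drain.

```python
from __future__ import annotations

from typing import Any, Protocol

# Patch body that marks a node unschedulable (a cordon).
CORDON_PATCH: dict[str, Any] = {"spec": {"unschedulable": True}}


class NodePatcher(Protocol):
    """Anything with patch_node(name, body), e.g. kubernetes.client.CoreV1Api."""

    def patch_node(self, name: str, body: Any) -> Any: ...


def cordon_nodes(api: NodePatcher, node_names: list[str]) -> list[str]:
    """Cordon each named node and return the names that were patched."""
    cordoned = []
    for name in node_names:
        api.patch_node(name, CORDON_PATCH)
        cordoned.append(name)
    return cordoned
```

With the official client, usage would look roughly like `config.load_kube_config()` followed by `cordon_nodes(client.CoreV1Api(), names)`. Passing every node name cordons the whole cluster, which is exactly the disruptive case to be careful with.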

CUDA 12 on GKE Autopilot

Per the NVIDIA docs, CUDA 12 applications require driver 525.60.04+. This driver is available as part of GKE 1.28. To upgrade an existing cluster to the latest version of 1.28: This upgrades the control plane, and schedules the nodes to follow, which generally completes within a day or two (depending on how many nodes you… Continue reading CUDA 12 on GKE Autopilot
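To check a driver version against the CUDA 12 minimum quoted above, compare the dotted parts as integers rather than as text. Here's a minimal sketch. The helper names are hypothetical, and the minimum is the one from the post, so check NVIDIA's current compatibility table before relying on it.

```python
from __future__ import annotations

CUDA12_MIN_DRIVER = "525.60.04"  # Minimum quoted in the post.


def parse_driver_version(version: str) -> tuple[int, ...]:
    """Turn "525.60.04" into (525, 60, 4); leading zeros don't matter."""
    return tuple(int(part) for part in version.strip().split("."))


def supports_cuda12(installed: str, minimum: str = CUDA12_MIN_DRIVER) -> bool:
    """True if the installed driver is at or above the CUDA 12 minimum."""
    return parse_driver_version(installed) >= parse_driver_version(minimum)


if __name__ == "__main__":
    for driver in ("470.182.03", "525.60.13", "535.104.05"):
        print(driver, supports_cuda12(driver))
```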

Using Image Streaming with DockerHub

Image streaming is a really great way to speed up workload scaling on GKE. Take for example the deep learning image from Google. In my testing, the container is created in just 20s, instead of 3m50s. While there is slightly higher latency on reads while the image streams, the 3m30s head start is going to… Continue reading Using Image Streaming with DockerHub

Finding the NVIDIA Driver Version on GKE

Update: this information is now available in the official docs. If you want to know which version of the GPU drivers is active on GKE, here’s a one-liner. It gets the logs of all Pods with the label k8s-app=nvidia-gpu-device-plugin (there are several different DaemonSets that can install the drivers depending on… Continue reading Finding the NVIDIA Driver Version on GKE

Editing IP Masquerading rules for GKE Autopilot

IPs are masqueraded by default in Autopilot to use the node IP for egress traffic, which is just a fancy way of saying that Pod traffic looks like it comes from the node’s IP. This is handy when using non-RFC1918 ranges in GKE to avoid IP exhaustion, since your Node IP range is typically in… Continue reading Editing IP Masquerading rules for GKE Autopilot
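Python's standard `ipaddress` module can tell you which side of that line a given range falls on by checking whether a CIDR block sits inside one of the three RFC 1918 private ranges. Here's a minimal sketch. `is_rfc1918` is a hypothetical helper, and the sample ranges (100.64.0.0/10 and 240.0.0.0/4 are examples of non-RFC1918 space) are illustrative only.

```python
import ipaddress

# The three private IPv4 blocks reserved by RFC 1918.
RFC1918_BLOCKS = (
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
)


def is_rfc1918(cidr: str) -> bool:
    """True if the entire CIDR range sits inside one RFC 1918 block."""
    network = ipaddress.ip_network(cidr)
    if network.version != 4:
        return False
    return any(network.subnet_of(block) for block in RFC1918_BLOCKS)


if __name__ == "__main__":
    for cidr in ("10.8.0.0/14", "100.64.0.0/10", "240.0.0.0/4"):
        print(cidr, "RFC 1918" if is_rfc1918(cidr) else "non-RFC 1918")
```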

GKE Network Planning (2023)

GKE operates on a flat VPC structure. That means that every Node and Pod has an identity within your VPC, and their IPs are not re-used. This is convenient, as Pods are addressable within the VPC, but unless you create multiple VPCs to isolate resources, you can end up using a lot of IPs very… Continue reading GKE Network Planning (2023)
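Because every Pod IP comes out of your VPC, it's worth doing the arithmetic before creating a cluster. GKE gives each node a fixed-size slice of the cluster's Pod range, so the Pod range's prefix length caps the node count. Here's a minimal sketch. `max_nodes` is hypothetical, and the per-node prefix depends on your maximum-Pods-per-node setting, so treat the values below as examples rather than defaults.

```python
import ipaddress


def max_nodes(pod_cidr: str, per_node_prefix: int) -> int:
    """Number of per-node Pod ranges (/per_node_prefix) that fit in pod_cidr."""
    network = ipaddress.ip_network(pod_cidr)
    if not network.prefixlen <= per_node_prefix <= network.max_prefixlen:
        raise ValueError("per-node prefix must be within the Pod range")
    return 2 ** (per_node_prefix - network.prefixlen)


if __name__ == "__main__":
    # Example: a /17 Pod range split into /24 slices leaves room for 128 nodes.
    print(max_nodes("10.0.0.0/17", 24))
```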

Adding Pod IP ranges to GKE clusters

Did you know that you can now add Pod IP ranges to GKE clusters? Pods use a lot of IPs, which in the past forced you to compromise. Do you allocate a lot of IPs to the cluster allowing for growth while reserving a big group of IPs, or do you allocate just a little… Continue reading Adding Pod IP ranges to GKE clusters

BYO Service Mesh on GKE Autopilot

Did you know you can now run any service mesh on Autopilot? That’s right! Even though Autopilot is a fully managed Kubernetes platform, which by nature means you don’t have full root access to the node, that doesn’t mean you’re limited in which service mesh you can install. How does it work? Service meshes require… Continue reading BYO Service Mesh on GKE Autopilot

Multi-Cluster Services on GKE

Connect internal services from multiple clusters together in one logical namespace. Easily connect services running in Autopilot to Standard and vice versa, share services between teams running their own clusters, and back an internal service with replicas in multiple clusters for cross-regional availability. All with Multi-cluster Services support in GKE. For this demo,… Continue reading Multi-Cluster Services on GKE

Strict Pod Co-location

Pod affinity is a useful technique in Kubernetes for expressing a requirement that a pod, say with the “reader” role, is co-located with another pod, say with the “writer” role. You can express that requirement by adding something like the following to the reader pod. The catch is, if there is no space on the… Continue reading Strict Pod Co-location
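A required Pod affinity of this kind generally looks like the stanza below. It's built here as a Python dict so it can be dumped to JSON, which `kubectl apply` accepts. This sketch assumes writer pods carry a hypothetical `role: writer` label. `topologyKey: kubernetes.io/hostname` is what makes "co-located" mean "same node".

```python
import json


def reader_affinity(role_key: str = "role", writer_role: str = "writer") -> dict:
    """spec.affinity for a reader pod that must share a node with a writer pod."""
    return {
        "podAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": [
                {
                    "labelSelector": {
                        "matchExpressions": [
                            {"key": role_key, "operator": "In", "values": [writer_role]}
                        ]
                    },
                    # Nodes are the topology domain, so "co-located" means same node.
                    "topologyKey": "kubernetes.io/hostname",
                }
            ]
        }
    }


if __name__ == "__main__":
    print(json.dumps({"spec": {"affinity": reader_affinity()}}, indent=2))
```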

Reducing GKE Log Ingestion

[Update (2023-12-20): You can now turn off workload logging in Autopilot. That is the recommended approach if you want to remove all workload logs. To disable workload logs for Autopilot (for example, if you use a third-party logging agent like Datadog), pass this value at cluster creation: Or update The remainder of this blog post… Continue reading Reducing GKE Log Ingestion

HA 3-zone Deployments with PodSpreadTopology on Autopilot

PodSpreadTopology is a way to get Kubernetes to spread out your pods across failure domains, typically nodes or zones. Kubernetes platforms typically have some default spread built in, although it may not be as aggressive as you want (meaning it might be more tolerant of imbalanced spread). Here’s an example Deployment with a PodSpreadTopology… Continue reading HA 3-zone Deployments with PodSpreadTopology on Autopilot
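In the Pod spec, this feature is the `topologySpreadConstraints` field. Here's a minimal sketch of a 3-replica Deployment that spreads strictly across zones, built as a Python dict and printed as JSON. The name, image, and `app` label are hypothetical. `maxSkew: 1` with `whenUnsatisfiable: DoNotSchedule` means a Pod stays Pending rather than land somewhere that would push the zone imbalance above one.

```python
import json


def zone_spread_deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Deployment whose Pods are spread evenly across zones."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "topologySpreadConstraints": [
                        {
                            "maxSkew": 1,
                            "topologyKey": "topology.kubernetes.io/zone",
                            "whenUnsatisfiable": "DoNotSchedule",
                            "labelSelector": {"matchLabels": labels},
                        }
                    ],
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(zone_spread_deployment("web", "nginx"), indent=2))
```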

SSD Ephemeral Storage on GKE (including Autopilot)

Do you need to provision a whole bunch of ephemeral storage to your Autopilot Pods? For example, as part of a data processing pipeline? In the past with Kubernetes, you might have used emptyDir as a way to allocate a bunch of storage (taken from the node’s boot disk) to your containers. This, however, requires… Continue reading SSD Ephemeral Storage on GKE (including Autopilot)

TensorFlow on GKE Autopilot with GPU acceleration

Last week, GKE announced GPU support for Autopilot. Here’s a fun way to try it out: a TensorFlow-enabled Jupyter Notebook with GPU acceleration! We can even add state, so you can save your work between sessions. Autopilot makes all this really, really easy, as you can configure everything as a Kubernetes object. Update: this post is… Continue reading TensorFlow on GKE Autopilot with GPU acceleration
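On Autopilot, a Pod typically asks for a GPU with an `nvidia.com/gpu` resource limit plus a `cloud.google.com/gke-accelerator` node selector naming the GPU type. Here's a minimal sketch of such a Pod as a Python dict. The accelerator type (`nvidia-tesla-t4`) and the TensorFlow Jupyter image tag are example values, so check which GPU types are available in your region. The persistent state from the post isn't included.

```python
import json


def gpu_notebook_pod(
    name: str = "tensorflow-notebook",
    image: str = "tensorflow/tensorflow:latest-gpu-jupyter",
    accelerator: str = "nvidia-tesla-t4",
    gpus: int = 1,
) -> dict:
    """Pod that requests GPUs of the given type via the accelerator node selector."""
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Tells Autopilot which GPU type the node needs.
            "nodeSelector": {"cloud.google.com/gke-accelerator": accelerator},
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": 8888}],  # Jupyter's default port.
                    # GPUs are requested as a limit on the extended resource.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(gpu_notebook_pod(), indent=2))
```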

Migrating an IP-based service in GKE

Let’s say you want to migrate a service in GKE from one cluster to another (including between Standard and Autopilot clusters), and keep the same external IP while you do. DNS might be the ideal way to update your service address, but for whatever reason you need to keep the IP the same. Fortunately, it is… Continue reading Migrating an IP-based service in GKE

Running GKE Autopilot at Scale

If you like what you see with Autopilot (now the recommended way to use GKE) and want to run it at scale, there are a few things to know when setting up the cluster and scaling your workload. Cluster creation: to run a large Autopilot cluster, it’s advisable to do two things: 1) create the… Continue reading Running GKE Autopilot at Scale

Provisioning one-off spare capacity for GKE Autopilot

I previously documented how to add spare capacity to an Autopilot Kubernetes cluster, whereby you create a placeholder Deployment to provision some scheduling headroom. This works to constantly give you a certain amount of headroom. For example, if you have a 2 vCPU placeholder (a.k.a. balloon) Deployment and your workloads use that capacity, the placeholder will get rescheduled.… Continue reading Provisioning one-off spare capacity for GKE Autopilot
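The placeholder pattern relies on Pod priority. The balloon runs at a negative priority, so real workloads preempt it, and the evicted balloon Pods then go Pending and cause fresh capacity to be provisioned. Here's a minimal sketch of the two objects involved, as Python dicts. The names, the -10 priority value, and the pause image tag are assumptions. The 2 vCPU request matches the example above.

```python
from __future__ import annotations

import json

BALLOON_PRIORITY = "balloon-priority"  # Hypothetical PriorityClass name.


def balloon_manifests(cpu: str = "2", replicas: int = 1) -> list[dict]:
    """A low PriorityClass plus a placeholder Deployment that reserves `cpu`."""
    priority_class = {
        "apiVersion": "scheduling.k8s.io/v1",
        "kind": "PriorityClass",
        "metadata": {"name": BALLOON_PRIORITY},
        # Negative, so Pods at the default priority (0) can preempt the balloon.
        "value": -10,
        # The balloon itself never preempts anything.
        "preemptionPolicy": "Never",
        "globalDefault": False,
    }
    labels = {"app": "balloon"}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "balloon"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "priorityClassName": BALLOON_PRIORITY,
                    "terminationGracePeriodSeconds": 0,
                    "containers": [
                        {
                            "name": "pause",
                            "image": "registry.k8s.io/pause:3.9",
                            "resources": {"requests": {"cpu": cpu}},
                        }
                    ],
                },
            },
        },
    }
    return [priority_class, deployment]


if __name__ == "__main__":
    # kubectl accepts a JSON List object wrapping several manifests.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": balloon_manifests()}, indent=2))
```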

High-Performance Compute on Autopilot

This week, Autopilot announced support for the Scale-Out Compute Class, for both x86 and Arm architectures. The point of this compute class is to give you cores for better single-threaded performance, and improved price/performance for “scale-out” workloads: basically for when you are saturating the CPU and/or need faster single-threaded performance (e.g., remote compilation).… Continue reading High-Performance Compute on Autopilot
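Workloads opt into a compute class with a node selector, and the architecture is picked with the standard `kubernetes.io/arch` label. Here's a minimal sketch of the Pod spec fragment, assuming the `cloud.google.com/compute-class: Scale-Out` selector. Check the current GKE compute class docs for the exact value. `scale_out_selector` is a hypothetical helper.

```python
import json

ALLOWED_ARCHES = ("amd64", "arm64")


def scale_out_selector(arch: str = "amd64") -> dict:
    """Pod spec nodeSelector for the Scale-Out compute class on x86 or Arm."""
    if arch not in ALLOWED_ARCHES:
        raise ValueError(f"arch must be one of {ALLOWED_ARCHES}, got {arch!r}")
    return {
        "nodeSelector": {
            "cloud.google.com/compute-class": "Scale-Out",
            # amd64 for x86 nodes, arm64 for Arm nodes.
            "kubernetes.io/arch": arch,
        }
    }


if __name__ == "__main__":
    print(json.dumps(scale_out_selector("arm64"), indent=2))
```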

Building Arm Images with Cloud Build

This week’s big news in Google Cloud was the addition of Arm across a wide range of products, including GCE VMs and GKE (both Standard and Autopilot). In an earlier post, I covered how to get an Arm-ready Autopilot cluster on day 1. The recommended way to build images for Arm is with buildx. This… Continue reading Building Arm Images with Cloud Build

Arm on Autopilot

Arm was made available in Preview on Google Cloud and GKE Autopilot today! As this is an early-stage Preview, there are a few details to pay attention to if you want to try it out, like the version, regions, and quota. I put together this quickstart for trying out Arm in Autopilot today. Arm nodes… Continue reading Arm on Autopilot

Minimizing Pod Disruption on Autopilot

There are 3 common reasons why a Pod may be terminated on Autopilot: node upgrades, a cluster scale-down, and a node repair. PDBs and graceful termination periods control how Pods are disrupted when these events happen, and maintenance windows and exclusions control when upgrade events can occur. Upgrade: gracefulTerminationPeriod is limited to one hour; PDB is respected… Continue reading Minimizing Pod Disruption on Autopilot
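The PDB is a separate object, while the termination grace period is the Pod spec's `terminationGracePeriodSeconds` field. Here's a minimal sketch of a PodDisruptionBudget (`policy/v1`) as a Python dict. The name, `app` label, and `minAvailable` value are hypothetical.

```python
from __future__ import annotations

import json


def pod_disruption_budget(name: str, app: str, min_available: int | str) -> dict:
    """PDB keeping at least `min_available` Pods labeled app=<app> running.

    `min_available` may be a count (2) or a percentage string ("50%").
    """
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": name},
        "spec": {
            "minAvailable": min_available,
            "selector": {"matchLabels": {"app": app}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(pod_disruption_budget("web-pdb", "web", 2), indent=2))
```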

Building GKE Autopilot

Last month I gave a presentation at KubeCon Europe in Valencia on “Building a Nodeless Kubernetes Platform”. In it, I shared details about the creation of GKE Autopilot, including some key decisions that we made, how the product was implemented, and why I believe that the design leads to an ideal fully managed platform. Autopilot… Continue reading Building GKE Autopilot

Preferring Spot in GKE Autopilot

Spot Pods are a great way to save money on Autopilot, currently 70% off the regular price. The catch is two-fold: your workload can be disrupted, and there may not always be Spot capacity available. For workload disruption, this is simply a judgement call. You should only run workloads that can accept disruption (abrupt termination). If… Continue reading Preferring Spot in GKE Autopilot
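A preference, as opposed to a hard requirement, is usually expressed with preferred node affinity on the `cloud.google.com/gke-spot` label. Pods then land on Spot capacity when it's available and fall back to regular capacity otherwise. Here's a minimal sketch of that `spec.affinity` stanza. `prefer_spot_affinity` is hypothetical, and depending on your GKE version a matching toleration may also be needed, so check the current Spot Pods docs.

```python
import json


def prefer_spot_affinity(weight: int = 100) -> dict:
    """spec.affinity that prefers, but does not require, Spot nodes."""
    if not 1 <= weight <= 100:
        raise ValueError("node affinity weights must be between 1 and 100")
    return {
        "nodeAffinity": {
            "preferredDuringSchedulingIgnoredDuringExecution": [
                {
                    "weight": weight,
                    "preference": {
                        "matchExpressions": [
                            {
                                "key": "cloud.google.com/gke-spot",
                                "operator": "In",
                                "values": ["true"],
                            }
                        ]
                    },
                }
            ]
        }
    }


if __name__ == "__main__":
    print(json.dumps({"affinity": prefer_spot_affinity()}, indent=2))
```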

Separating Workloads in Autopilot

Autopilot, while operationally nodeless, still creates nodes for your workloads behind the scenes. Sometimes it may be desirable as an operator to separate your workloads so that certain workloads are scheduled on their own separate nodes, a technique known as workload separation. One example I heard recently was a cluster that primarily processes large batch… Continue reading Separating Workloads in Autopilot
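In Autopilot, workload separation is typically requested by giving the Pods both a toleration and a node selector for the same custom key and value. Autopilot then provisions nodes with the matching label and taint. Here's a minimal sketch of that Pod spec fragment. The `group: batch` pair is a hypothetical example.

```python
import json


def separation_fragment(key: str = "group", value: str = "batch") -> dict:
    """Pod spec fields that request dedicated nodes labeled and tainted key=value."""
    return {
        # Only schedule onto nodes carrying the label...
        "nodeSelector": {key: value},
        # ...and tolerate the matching taint that keeps other Pods off them.
        "tolerations": [
            {"key": key, "operator": "Equal", "value": value, "effect": "NoSchedule"}
        ],
    }


if __name__ == "__main__":
    print(json.dumps(separation_fragment(), indent=2))
```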

Kubernetes Nodes and Autopilot

One of the key design decisions of GKE Autopilot is the fact that we kept the same semantic meaning of the Kubernetes node object. It’s “nodeless” in the sense that you don’t need to care about, or plan for nodes—they are provisioned and managed automatically based on your PodSpec. However, the node object still exists… Continue reading Kubernetes Nodes and Autopilot

Creating an Autopilot cluster at a specific version

Sometimes you may wish to create or update a GKE Autopilot cluster with a specific version. For example, the big news this week is that mutating webhooks are supported in Autopilot (from version 1.21.3-gke.900). Rather than waiting for your desired version to be the default in your cluster’s release channel, you can update ahead of… Continue reading Creating an Autopilot cluster at a specific version

Choosing the right network size for Autopilot

Update (2023-09): Autopilot now supports adding more Pod IP ranges, so you no longer need to plan this up front. Read the official docs and my test run. One of the most important decisions you can make for your Autopilot cluster is selecting the right network size. Too small and you’ll constrain… Continue reading Choosing the right network size for Autopilot

Using GKE Autopilot in specific zones

GKE Autopilot is deployed using the regional cluster architecture. This has a number of advantages such as giving you 3 master nodes for high availability of the control plane, and the ability to spread pods among zones for high availability of your workloads. But sometimes this may be more than what you need, and zonal… Continue reading Using GKE Autopilot in specific zones
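At the Pod level, the standard Kubernetes way to keep workloads in particular zones is required node affinity on the `topology.kubernetes.io/zone` label. Here's a minimal sketch of that `spec.affinity` stanza. The zone names are examples, and this isn't necessarily the approach the full post takes.

```python
from __future__ import annotations

import json


def restrict_to_zones(zones: list[str]) -> dict:
    """spec.affinity that only allows nodes in the listed zones."""
    if not zones:
        raise ValueError("provide at least one zone")
    return {
        "nodeAffinity": {
            # Required node affinity takes a NodeSelector object, not a list.
            "requiredDuringSchedulingIgnoredDuringExecution": {
                "nodeSelectorTerms": [
                    {
                        "matchExpressions": [
                            {
                                "key": "topology.kubernetes.io/zone",
                                "operator": "In",
                                "values": sorted(set(zones)),
                            }
                        ]
                    }
                ]
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps({"affinity": restrict_to_zones(["us-central1-a"])}, indent=2))
```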