I recently set out to run Stable Diffusion on GKE in Autopilot mode, building a container from scratch using AUTOMATIC1111’s webui. This is likely not how you’d host a Stable Diffusion service for production (which would make a good topic for another blog post), but it’s a fun way to try out the tech.

My first key learning was to start with a Google Deep Learning Container which provides a useful base image with CUDA 12 support and is based on Debian. This provides the perfect operating environment for running a CUDA 12 application on Google Cloud. I first attempted to create my own image using Debian, but Stable Diffusion couldn’t find the GPU—better just to use the Google ones that are preconfigured with everything I need!

Next, I had to design the actual container. At first I built a derivative image that installed as much of Stable Diffusion as possible at build time. It turned out that this didn’t help much: while I could clone the repo and install a few dependencies, there was still a ton of setup happening at runtime. The webui repo is basically not set up for container-native builds, and creating one was out of scope for my test. My second attempt was a simple derivative container that just installed a couple of needed Linux packages before kicking off the webui script. Finally, I decided that the hassle of the derivative container (needing to build and upload my own multi-gig container) wasn’t worth the payoff, since I could simply run those couple of steps at runtime along with the rest of the Stable Diffusion webui setup. So my final design was to use the base image and load in a script that configures Debian and then kicks off the webui script. That design is presented here.

The build

As usual, we create an Autopilot cluster with just 3 pieces of information: the name, version and region. You’ll need to use version 1.28 or later, which has NVIDIA L4 support, and a region with L4 GPUs.

CLUSTER_NAME=stable-diffusion
VERSION="1.28"
REGION=us-central1
gcloud container clusters create-auto $CLUSTER_NAME \
    --region $REGION --release-channel rapid \
    --cluster-version $VERSION

To configure Stable Diffusion I’m going to add 2 bash scripts into the base container: run.sh with my setup steps, and webui-user.sh with the webui settings. The run.sh setup does the following:

- Installs Debian dependencies required by Stable Diffusion

- Clones the Stable Diffusion webui repo

- Copies in the webui-user.sh file

- Downloads some models from civitai

- Runs the Stable Diffusion webui run script, which will configure then launch the webui

These are similar to the steps you would run if you were doing this locally. Again, this isn’t particularly container-native, but creating a production-grade Stable Diffusion container wasn’t in scope for my demo.
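One side effect of running these steps on every container start is that `git clone` reports an error on restarts, because the checkout already exists on the persistent disk. The script carries on regardless, but a guard keeps the logs cleaner. Here is a minimal sketch, assuming a helper named `clone_if_missing` (a hypothetical name, not part of the original script):

```shell
#!/bin/bash
# Hypothetical helper: clone a repo only when the target directory
# does not already contain a git checkout.
clone_if_missing() {
  local url="$1" dir="$2"
  if [ -d "$dir/.git" ]; then
    echo "Found existing checkout in $dir, skipping clone"
  else
    git clone "$url" "$dir"
  fi
}

# Usage inside run.sh (URL and path as used by the setup script):
# clone_if_missing https://github.com/AUTOMATIC1111/stable-diffusion-webui /app/data/stable-diffusion-webui
```

This mirrors the "skip if it exists" check that the setup script already applies to model downloads.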

Here’s what those files look like, as encapsulated in a ConfigMap

apiVersion: v1
kind: ConfigMap
metadata:
  name: stable-diffusion-config
data:
  run.sh: |
    #! /bin/bash

    echo "Dependencies ---------------------------------------------------"
    # Install dependencies
    apt-get update
    # required dependencies of stable diffusion
    apt-get install -y wget git libgl1 libglib2.0-0 google-perftools
    # install other debugging tools
    apt-get install -y vim

    # Clone stable diffusion webui
    cd /app/data
    git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui
    cd stable-diffusion-webui

    # Copy the stable diffusion webui user config
    cp /app/config/webui-user.sh .

    echo "Models ---------------------------------------------------------"
    # Download some stable diffusion models on first run
    declare -A models
    declare -A titles
    titles["v1-5-pruned.ckpt"]="Stable Diffusion 1.5"
    models["v1-5-pruned.ckpt"]="https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned.ckpt"
    titles["Protogen-V22-Anime.safetensors"]="Protogen v2.2 (Anime)"
    models["Protogen-V22-Anime.safetensors"]="https://civitai.com/api/download/models/4007"
    titles["DreamShaper.safetensors"]="DreamShaper"
    models["DreamShaper.safetensors"]="https://civitai.com/api/download/models/128713?type=Model&format=SafeTensor&size=pruned&fp=fp16"
    titles["A-Zovya-RPG.safetensors"]="A-Zovya RPG Artist Tools"
    models["A-Zovya-RPG.safetensors"]="https://civitai.com/api/download/models/79290"
    titles["Realistic-Vision-V6-0-B1.safetensors"]="Realistic Vision V6.0 B1"
    models["Realistic-Vision-V6-0-B1.safetensors"]="https://civitai.com/api/download/models/245598?type=Model&format=SafeTensor&size=full&fp=fp16"
    titles["icbinpICantBelieveIts_lcm.safetensors"]="ICBINP - I Can't Believe It's Not Photography"
    models["icbinpICantBelieveIts_lcm.safetensors"]="https://civitai.com/api/download/models/253668?type=Model&format=SafeTensor&size=pruned&fp=fp16"

    echo "Downloading models..."
    cd models/Stable-diffusion
    for key in "${!models[@]}"; do
      # Only download models that are not already on the persistent disk
      if [ ! -f "$key" ]; then
        echo "Downloading ${titles[$key]}"
        curl -L "${models[$key]}" > "$key"
      else
        echo "Model ${titles[$key]} ($key) exists, skipping"
      fi
    done
    cd ../../

    echo "Stable Diffusion ----------------------------------------------"
    # Run the setup & boot
    # Note: This container runs as the root user, `-f` is needed to run as root
    ./webui.sh -f
  webui-user.sh: |
    #!/bin/bash
    #########################################################
    # Uncomment and change the variables below to your need:#
    #########################################################

    # Install directory without trailing slash
    #install_dir="/home/$(whoami)"

    # Name of the subdirectory
    #clone_dir="stable-diffusion-webui"

    # Commandline arguments for webui.py, for example: export COMMANDLINE_ARGS="--medvram --opt-split-attention"
    export COMMANDLINE_ARGS="--xformers --listen"
    #export COMMANDLINE_ARGS="--xformers --share --listen"

    # python3 executable
    #python_cmd="python3"

    # git executable
    #export GIT="git"

    # python3 venv without trailing slash (defaults to ${install_dir}/${clone_dir}/venv)
    #venv_dir="venv"

    # script to launch to start the app
    #export LAUNCH_SCRIPT="launch.py"

    # install command for torch
    #export TORCH_COMMAND="pip install torch==1.12.1+cu113 --extra-index-url https://download.pytorch.org/whl/cu113"

    # Requirements file to use for stable-diffusion-webui
    #export REQS_FILE="requirements_versions.txt"

    # Fixed git repos
    #export K_DIFFUSION_PACKAGE=""
    #export GFPGAN_PACKAGE=""

    # Fixed git commits
    #export STABLE_DIFFUSION_COMMIT_HASH=""
    #export CODEFORMER_COMMIT_HASH=""
    #export BLIP_COMMIT_HASH=""

    # Uncomment to enable accelerated launch
    #export ACCELERATE="True"

    # Uncomment to disable TCMalloc
    #export NO_TCMALLOC="True"

    ###########################################

Then, I deploy my StatefulSet which references the deep learning container and mounts those 2 files to /app/config. The run command is pointed at my run.sh script from the ConfigMap.

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: stable-diffusion
spec:
  selector:
    matchLabels:
      pod: sd
  serviceName: sd
  replicas: 1
  template:
    metadata:
      labels:
        pod: sd
    spec:
      nodeSelector:
        cloud.google.com/gke-accelerator: nvidia-l4
        cloud.google.com/gke-spot: "true"
      terminationGracePeriodSeconds: 25
      containers:
      - name: cu113-py310-container
        image: us-docker.pkg.dev/deeplearning-platform-release/gcr.io/base-cu113.py310
        command: ["/app/config/run.sh"]
        resources:
          requests:
            ephemeral-storage: 10Gi
            memory: 26Gi
          limits:
            nvidia.com/gpu: "1"
        volumeMounts:
        - mountPath: /app/data
          name: sd-pvc
        - mountPath: /app/config/
          name: config
      volumes:
      # configmap with the 2 configuration files
      - name: config
        configMap:
          name: stable-diffusion-config
          defaultMode: 0777
  volumeClaimTemplates:
  - metadata:
      name: sd-pvc
    spec:
      accessModes:
      - ReadWriteOnce
      storageClassName: "premium-rwo"
      resources:
        requests:
          storage: 200Gi
---
# Headless service for the above StatefulSet
apiVersion: v1
kind: Service
metadata:
  name: sd
spec:
  ports:
  - port: 7860
  clusterIP: None
  selector:
    pod: sd

stable-diffusion-statefulset.yaml

To run this demo yourself, here are the steps.

1. Clone the repo

git clone https://github.com/WilliamDenniss/autopilot-examples.git

cd autopilot-examples/stable-diffusion

2. To deploy, create both files.

kubectl create -f stable-diffusion-config.yaml

kubectl create -f stable-diffusion-statefulset.yaml

3. Watch the rollout

watch -d kubectl get pods,nodes

When the Pod shows Running, you’re not done as there’s still a lot of runtime setup before the application is ready. Follow the boot progress like so:

kubectl logs -l pod=sd --tail=-1 -f

(The components of that command: -l pod=sd selects all Pods with the label pod=sd, --tail=-1 outputs all the logs from the start of the container run, and -f follows the logs to print new messages.)

It will take a bit of time to set up everything and download the models. Look for this line in the logs:

Running on local URL: http://0.0.0.0:7860
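If you’d rather not watch the logs scroll by, you can filter the stream for that line. The following is a rough sketch; the helper name `wait_for_webui` is hypothetical, and it assumes the log message keeps the "Running on local URL" wording shown above.

```shell
#!/bin/bash
# Hypothetical helper: read log lines from stdin and print the first one
# announcing the webui URL, then stop reading.
wait_for_webui() {
  grep -m 1 "Running on local URL"
}

# Usage: blocks until the webui reports that it is listening.
# kubectl logs -l pod=sd --tail=-1 -f | wait_for_webui
```

Because `kubectl logs -f` only notices the closed pipe when it next writes a line, the pipeline may linger briefly after the match is printed.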

To access the UI, there are a couple of ways. The most private is to forward a port locally like so:

kubectl port-forward sts/stable-diffusion 7860:7860

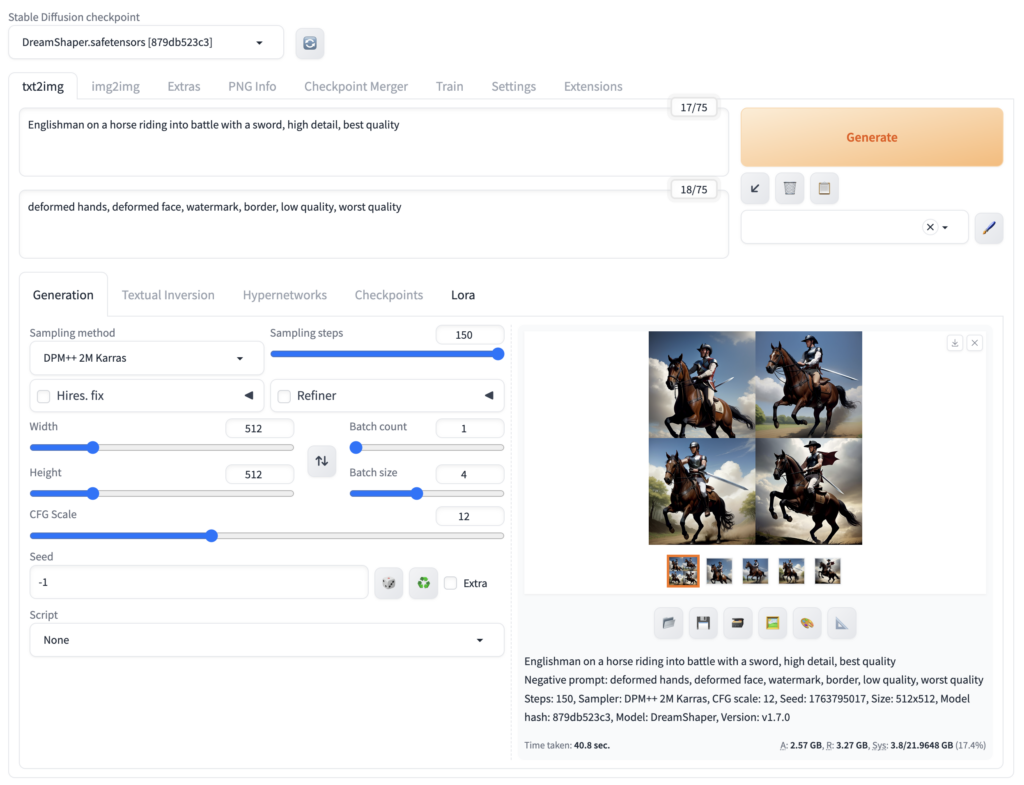

Finally, you can access the service on your computer at http://localhost:7860 (see below for other sharing options). The UI looks like this:

Diffusing

Now that the UI is serving, we can get to work. Pick a model from the dropdown. Enter some positive prompts for what you want to see, negative prompts for what you don’t want and give it a try. Look online for some examples, as very basic prompts of just a couple of words don’t always look amazing.

Here’s one of the first images I got out of Stable Diffusion on GKE Autopilot using the dreamshaper checkpoints. Pretty happy with the result! Prompt: “englishman on a horse riding into battle with a sword”, negative prompt: “deformed hands, deformed face”

Many image genAI systems give you 1 or 4 images to choose from each time. The nice thing about running it yourself like this is when you find a prompt that works, you can turn up the batch count and generate 10s or even 100s of variants so you can pick the best one. The PNG metadata includes the prompts and the image filename has the random seed which is useful if you want to go back and generate variations of an image.

Next Steps

Stop and Start

Since this is in a StatefulSet, you can safely delete it to stop consuming the L4 GPU and save money when you don’t need it. When you create it again it will mount the same disk, so it will preserve your settings and boot up faster.

# stop

kubectl delete sts stable-diffusion

# start

kubectl create -f stable-diffusion-statefulset.yaml
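An alternative sketch, in case you’d rather keep the StatefulSet object in the cluster: scaling it to zero replicas also removes the Pod (and with it the GPU request) while leaving the PersistentVolumeClaim in place. The function names `sd_stop` and `sd_start` and the `KUBECTL` override are hypothetical conveniences, not part of the original demo.

```shell
#!/bin/bash
# Hypothetical helpers wrapping `kubectl scale`.
# Set KUBECTL=echo to preview the command instead of running it.
sd_stop() {
  local kubectl="${KUBECTL:-kubectl}"
  "$kubectl" scale sts stable-diffusion --replicas=0
}

sd_start() {
  local kubectl="${KUBECTL:-kubectl}"
  "$kubectl" scale sts stable-diffusion --replicas=1
}

# Usage:
# sd_stop    # removes the Pod, keeps the disk
# sd_start   # recreates the Pod against the same disk
```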

Copy Images

If you want to copy all the generated images to your computer, you can use kubectl cp like so:

$ kubectl cp stable-diffusion-0:/app/data/stable-diffusion-webui/outputs .

Update Config

If you change the config in the ConfigMap, you can update it and redeploy the StatefulSet like so. The restart is needed because run.sh only executes when the container starts, so a running Pod won’t act on the updated ConfigMap.

kubectl replace -f stable-diffusion-config.yaml

kubectl rollout restart sts stable-diffusion

Troubleshooting

While modifying the run script, you might encounter issues that prevent it from running properly, resulting in a crashed container and eventually a CrashLoopBackOff status. To debug, change the command to the sleep command so you can exec in and tweak the run script to get it right. Then copy your changes back into the ConfigMap.

command: ["sleep", "infinity"]

Modify the live state

To directly modify the installation (including downloading additional models via the command line), you can exec into the Pod rather than editing the ConfigMap. Here’s an example that downloads a couple of “steampunk” LoRAs to further style the images (you can bake this into the setup script too, of course).

$ kubectl exec -it stable-diffusion-0 -- bash

# cd /app/data/stable-diffusion-webui

# ls

# cd models/Lora

# curl -L "https://civitai.com/api/download/models/75592?type=Model&format=SafeTensor" > SteampunkSchematicsv2-000009.safetensors

# curl -L "https://civitai.com/api/download/models/102659?type=Model&format=SafeTensor" > "SteamPunkMachineryv2.safetensors"

# exit

Share

To share with more people, there are a few options:

Gradio share

Update the webui-user.sh config to add --share.

export COMMANDLINE_ARGS="--xformers --share --listen"

Redeploy the ConfigMap and restart the StatefulSet as above. Since it’s a StatefulSet and the setup is preserved, this should be pretty quick. Then look at the logs for your share link. Note that anyone who has this link can access your server. Here’s an example log with that link:

Running on public URL: https://5d3a2960e3bc389556.gradio.live

LoadBalancer

To share with the world, you can create a LoadBalancer. Just note that anyone will be able to access your server.
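As a rough sketch of what that could look like, the function below prints a Service manifest of type LoadBalancer that selects the same `pod: sd` label and forwards to the webui port 7860. The Service name `sd-public`, the external port 80, and the helper name `sd_lb_manifest` are illustrative assumptions, not taken from the original demo.

```shell
#!/bin/bash
# Hypothetical helper: print a LoadBalancer Service manifest for the webui.
# The name "sd-public" and external port 80 are illustrative choices.
sd_lb_manifest() {
  cat <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: sd-public
spec:
  type: LoadBalancer
  selector:
    pod: sd
  ports:
  - port: 80
    targetPort: 7860
EOF
}

# Usage:
# sd_lb_manifest | kubectl apply -f -
# kubectl get svc sd-public   # wait for EXTERNAL-IP to be assigned
```

If you create this Service, remember to delete it again during cleanup.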

Suspend/Resume

The neat thing about StatefulSet is that you can suspend and resume, and pick up right where you left off (including any configuration changes you made) simply by deleting and recreating the StatefulSet.

# stop

kubectl delete sts stable-diffusion

# resume

kubectl create -f stable-diffusion-statefulset.yaml

Troubleshooting

FailedScaleUp

GPU hardware is in high demand, and in this demo we’re using Spot GPU. It’s possible for the container to be preempted. If you get a message like “FailedScaleUp” it’s an indication that there is no capacity currently available.

“Torch is not able to use GPU”

This can indicate a driver issue. Make sure you’re running GKE 1.28 or later, as earlier versions had an older Nvidia driver. Learn more about CUDA 12 on Autopilot, and finding the current driver version.
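One way to see what the container can actually reach is to run `nvidia-smi` inside the Pod, which reports the driver version and the visible GPU. This is a sketch under assumptions: the helper name `sd_gpu_info` and the `KUBECTL` override are hypothetical, and it assumes `nvidia-smi` is on the container’s PATH and that the Pod is running (for example with the sleep command from the earlier troubleshooting tip).

```shell
#!/bin/bash
# Hypothetical helper: run nvidia-smi inside the Stable Diffusion Pod.
# Assumes nvidia-smi is available on the container's PATH.
# Set KUBECTL=echo to preview the command instead of running it.
sd_gpu_info() {
  local kubectl="${KUBECTL:-kubectl}"
  "$kubectl" exec stable-diffusion-0 -- nvidia-smi
}

# Usage:
# sd_gpu_info
```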

Cleanup

To delete everything, you’ll need to remove the PersistentVolumeClaim along with the StatefulSet. This will delete the underlying disk and its data.

kubectl delete sts stable-diffusion

kubectl delete pvc sd-pvc-stable-diffusion-0

kubectl delete svc sd

What’s next?

So that’s Stable Diffusion on GKE. Pretty neat, and you can easily stop and start it while keeping your work. It’s basically an on-demand WebUI for stable diffusion running in the cloud.

Have you been inspired to build your own startup around Stable Diffusion? A few have already, and you can see how powerful this tech is as a starting point, and how open this genai revolution really is.

If you’re going to build a product around Stable Diffusion, you’ll almost certainly want a different setup. Hosting Stable Diffusion as a service with Ray Serve might be one way to go.